Behind every guitar god there is, literally, a drummer making the odd 7/8 or 5/4 bar sound like 4/4. Paraphrasing Einstein, true guitar gods don’t play at dice or outside of a strict 4/4. (Inspired by: what the heck even is “Black Dog”?)

Squeeze out the trickiest part of the problem, another part of the problem becomes the trickiest. A tale as old as time.

Today, it seems like the biggest opportunities will be in the third of my opening statements. Building systems remains hard. Can I assume you’re familiar with Amdahl’s Law? That’s what’s going on: a massive speed up on a portion of the problem, but as that portion speeds up it becomes less and less of a contributor to the overall speedup. Lowering the costs of the rest of the problem is work that remains to be done. It’s going to take a long time, because the real world is full of sticky problems, surprising feedback loops, human stubbornness, and the occasional adversary.

– Marc Brooker, You Are Here
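To put rough numbers on the Amdahl's Law point, here is a minimal sketch. The 30% figure for coding's share of total delivery time is an assumption made up for illustration, not something from Brooker's post, and `amdahl_speedup` is a hypothetical helper name.

```python
def amdahl_speedup(fraction_accelerated: float, factor: float) -> float:
    """Overall speedup when `fraction_accelerated` of the work runs `factor` times faster.

    Amdahl's Law: 1 / ((1 - p) + p / s)
    """
    if not 0.0 <= fraction_accelerated <= 1.0:
        raise ValueError("fraction_accelerated must be between 0 and 1")
    if factor <= 0:
        raise ValueError("factor must be positive")
    return 1.0 / ((1.0 - fraction_accelerated) + fraction_accelerated / factor)


# Assumption for illustration only: coding is 30% of the time it takes to deliver software.
CODING_SHARE = 0.30

if __name__ == "__main__":
    for factor in (2, 10, 100, 1_000_000):
        overall = amdahl_speedup(CODING_SHARE, factor)
        print(f"coding {factor}x faster -> delivery {overall:.2f}x faster")
```

Under that assumption, even an effectively infinite coding speedup caps the overall gain at 1 / 0.7, or about 1.43x. The other 70% is where the remaining work is.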

You squeeze out coding time, you’re still left with open problems like:

- Running software in isolated, trusted environments (sandboxes) and coordinating work between programs (agents, databases, etc.).

- Human think time, which generates things like taste, and deciding to include this feature instead of that feature because intuition says it will improve the software.

- Coordination and collaboration between humans, which generates recurring meetings (no, thanks) but also great ideas out of nowhere (that’s why we’re here!)

- Verifying what you’ve built and vetting that what you’re proposing to integrate (merge) into the software is any good.

Be careful not to squeeze out the costs that are actually valuable. Otherwise, you might end up with inversions like banks that operate vast airborne transit networks at a loss.

Blogging (Classic)

NetNewsWire was one of the first, if not the first, Mac OS X apps that I bought for my first Mac, an iBook G3. Indulge me some nostalgia for a moment.

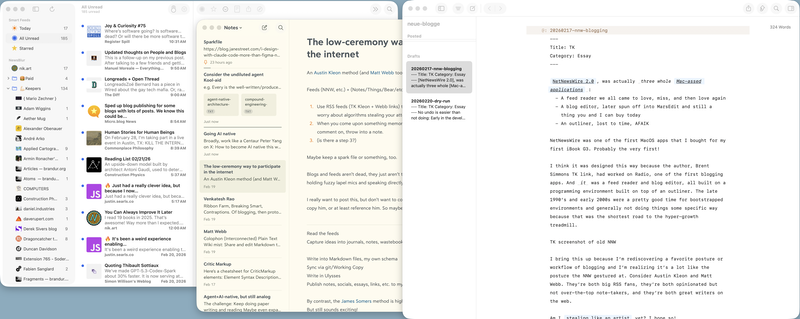

NetNewsWire 2.0 was three whole Mac-assed applications:

- A feed reader we all came to love, miss, and then love again

- A blog editor, later spun off into MarsEdit and still a thing you and I can buy today

- An outliner, lost to time, AFAIK

I think this design came about because the author, Brent Simmons, had worked on Radio, one of the first blogging apps. And it was a feed reader and blog editor, all built on a programming environment built on top of an outliner.

The late 1990s and early 2000s were a pretty good time for bootstrapped environments! And, generally, for not doing things some specific way just because that was the shortest road to the hyper-growth treadmill.

I mention this because I’m rediscovering a blogging workflow and realizing it’s a lot like what the NNW of old gestured at. Consider Austin Kleon and Matt Webb. They’re both long-time RSS fans, opinionated but not over-the-top note-takers, and they’re great writers on the web. Clearly, they’ve got something figured out. They both read websites via NNW, throw the good stuff into their notes (outlines), and then mix the good stuff together into a blog editor and hit publish.

To stand on their shoulders a bit, here’s me doing my spin on their workflow:

Am I stealing like an artist yet? I hope so!

This setup won’t conquer writer’s block or slop out a newsletter opinion piece for you. But it’s a pretty dang good way to arrange the web on your desk, so to speak, so that you stay in your zone, not some algorithm’s. And it arranges the world so that neat ideas roll downhill from your feed reader to your notes and into your editor for digestion and posting to your blog.

This is a recommended way of writing on the web and thinking about the world.

I love when/what Matt Webb builds:

It should be SO EASY to share + collaborate on Markdown text files. The AI world runs on .md files. Yet frictionless Google Docs-style collab is so hard… UNTIL NOW, and how about that for a tease.

We need more “this (thing) should be as easy to collaborate on as Google Docs” sort of things in the world. Granted, I’m not a fan of writing in or using Google Docs! But the collaboration model is right and people know how to use it. May many more bloom.

Contra my optimism on software estimates, some realism:

I gather as much political context as possible before I even look at the code. How much pressure is on this project? Is it a casual ask, or do we have to find a way to do this? What kind of estimate is my management chain looking for? There’s a huge difference between “the CTO really wants this in one week” and “we were looking for work for your team and this seemed like it could fit”.

Ideally, I go to the code with an estimate already in hand. Instead of asking myself “how long would it take to do this”, where “this” could be any one of a hundred different software designs, I ask myself “which approaches could be done in one week?“. – Sean Goedecke, How I estimate work as a staff software engineer

Folks with the best intentions have asked me “How long would this project take? Please say two weeks, that’s how much time we have.” Well, maybe I embellished the second part. Point is, too often I interpreted this as asking the question, and not as asking for an answer in the time-is-scarce context we were actually operating in.

We often didn’t like the answer. Too much scope cut to fit in their time frame (aka appetite) or not enough detail to decide where to trade scope for time.

In other words, I don’t “break down the work to determine how long it will take”. My management chain already knows how long they want it to take. My job is to figure out the set of software approaches that match that estimate.

Sometimes, you make your coin by delivering exactly what was asked for, sometimes you make it by solving the problem in a novel way. Every so often, you make it by saying no and choosing to do something with better trade-offs.

Astronomy is perhaps the science whose discoveries owe least to chance, in which human understanding appears in its whole magnitude, and through which man can best learn how small he is. – Georg Christoph Lichtenberg, The Waste Books

You can’t argue with a star!

I went to see an elephant about a birthday.

Dave Rupert, Write about the future you want:

There’s a lot that’s not going well; politics, tech bubbles, the economy, and so on. I spend most of my day reading angry tweets and blog posts. There’s a lot to be upset about, so that’s understandable. But in the interest of fostering better discourse, I’d like to offer a challenge that I think the world desperately needs right now: It’s cheap and easy to complain and say “[Thing] is bad”, but it’s also free to share what you think would be better.

Co-signed.

This is not my beautiful agent-driven economy

We’re in a weird agent coding moment here. AI maximalists are putting wild, often incoherent, projects out there. Some, seemingly replacing their writing output with LLM-trained output. (I don’t understand the temptation to that one at all. The interesting thing about writing, as with any performance art, is risk!) Others, merely annoying the heck out of each other with crustacean emoji.

It feels like we’ve got two groups pursuing entirely different paths to the next level of agent coding. Both camps are not even wrong:

- The open problems path: we need to figure out correctness, guardrails, what this means for teams, etc. Only then can we be certain we’re not just burning tokens in pursuit of software we’ll have to clean up or write off as brown-field development in the next 3–6 months. This isn’t even the AI safety crowd of 12–18 months ago. I would have called these folks pragmatists just a couple of months ago.

- The maximal/acceleration path: figure out how to get the agents working faster so that they solve the problems for us. Build the system that solves the problem, mostly through throwing more agents and tokens at any given problem. These aren’t the (non-political) accelerationists who foresee AI and blockchains coming together to allow people to nope out of civilization and run their own AI-enabled private cities and enclaves. I would have called these folks the hype train conductors a few months ago.

I’m inclined to pursue open problems (validation and verification, collaboration, guardrails) before dialing up the output and delegation (orchestration and parallelism). The silver lining is we’re going to find out (possibly quickly) which camp is relatively right (Has Some Good Points) in addition to not even wrong. 🙃

Below, some solid ideas others have had about this tension between “well there are open problems…” and “…jump in with two feet anyway!”.

Justin Searls asked when we would see this kind of surplus of software production and deficit of taste. It seems like it’s just around the corner and the two following links are the early recognition. In other words, he was only off by a few months.

Armin Ronacher, Agent Psychosis: Are We Going Insane?:

The thing is that the dopamine hit from working with these agents is so very real. I’ve been there! You feel productive, you feel like everything is amazing, and if you hang out just with people that are into that stuff too, without any checks, you go deeper and deeper into the belief that this all makes perfect sense. You can build entire projects without any real reality check. But it’s decoupled from any external validation. For as long as nobody looks under the hood, you’re good. But when an outsider first pokes at it, it looks pretty crazy.

It takes you a minute of prompting and waiting a few minutes for code to come out of it. But actually honestly reviewing a pull request takes many times longer than that. The asymmetry is completely brutal. Shooting up bad code is rude because you completely disregard the time of the maintainer. But everybody else is also creating AI-generated code, but maybe they passed the bar of it being good. So how can you possibly tell as a maintainer when it all looks the same? And as the person writing the issue or the PR, you felt good about it. Yet what you get back is frustration and rejection.

This is what I’ve been saying since the summer. We are in the middle of software engineering’s productivity crisis.

LLMs inflate all previous productivity metrics (PRs, commits) without a correlation to value. This will be used to justify layoffs in Q1/Q2 of this year.

2026 gonna be wild, and we’re only one month in.

I’ve been reading Power Broker on and off throughout the year (brag). I’m almost five hundred pages in (brag again) and it’s worth it. The writing is as good as the research is deep. Which is to say, it manages to maintain a great pace even though it’s a huge book (brag the third). Caro keeps that pace by alternating between moving the Robert Moses story ahead and adding color with brief stories of the minor characters involved in Moses’ story at the time.

Furthermore, Robert Moses was a real jerk. And, a bully. 🤦♂️ Same as it ever was.

I’m continuing with the Dune chronicles. Children of Dune seemed like all setup and only perfunctory pay-off. God Emperor felt like it had more to say, whether it’s philosophical or political. The three-thousand-year jump ahead is a lot, though!

As a palate cleanser between Dune novels and Power Broker chapters: just started Liberation Day by George Saunders and Iberia by James Michener. 📈

If you have significant previous coding experience - even if it’s a few years stale - you can drive these things really effectively. Especially if you have management experience, quite a lot of which transfers to “managing” coding agents - communicate clearly, set achievable goals, provide all relevant context.

Based on my own experience and the industry news, it’s not a great time to look for engineering management work. But, based on a sample size of one, it’s a good time for engineering leaders/managers to ride the EM/IC pendulum back to IC work, with a leadership twist.

I’ve been calling this “engineering leadership, the good parts”. More of the activities that produce software, for people, amongst teams, less of the activities that allow software teams to exist within larger organizations and business hierarchies. Pairing those skills with teams of people and coding agent collaborators is pretty potent these days.

Pro-tip: if you’re an IC who is really enjoying this agent stuff, you could be a future engineering leader!

Processes should serve outcomes, not the other way ‘round

In moments where process overwhelms a team’s ability to get stuff done, I’ve been fond of saying we’re not here to:

- Push Trello cards around.

- Guess if a coding task is more like a small t-shirt or a large burrito.

- Arrange our git commits in just the right order.

- Write long-lived documentation.

I’d suggest that, instead, we’re here to ship code.

Now that writing code isn’t the tricky part, I’m more convinced than ever both that we are not here to operate a process and that activities like these are more important.

I never claimed my aphorisms were consistent or even logical!

Somehow, it was only within the last month that I stumbled upon a better saying. We’re not here to write code, operate processes, recite our statuses in meetings, etc.; we’re in the software development game to make outcomes. In particular, the outcomes you might expect of a software-technology company, if you’ll pardon some light hype-y jargon. Shipping code is a small part of that, but it’s in service of fixing problems, improving efficiency, solving new problems for customers, or putting an invention into the world.

I was going to include checklists in the list of things we’re not here to do. But, checklists are a pretty great way of thinking, and often lead to their intended outcomes. When they don’t lead to those outcomes, it’s typically easy to look at the items in the list and see why! “Oh, we skipped this safety check and things went sideways” or “we didn’t do the user research and, predictably, users weren’t sure what this feature is for.”

By contrast, documents, processes, and quality gates typically obscure your organization’s intended outcome. They’re too frequently a trade-off to prevent getting burnt in the same way as before. Which is probably why they correlate so frequently with failing to hit or losing track of the positive outcomes that matter.

“We’re here to learn” is a good proxy for “we’re here to generate outcomes”. If a checklist (or process or document, if you must) helps you learn more about customers/markets/your design/the world/etc. faster, it’s probably a good thing for you. If it prevents success and failure in equal probability density, it’s likely overhead.

I (was) back at Destiny 2, since they’ve fixed many of the daily gameplay loops and put Star Wars in there. (Not even the most bonkers statement of 2026, somehow.) The odd thing about Bungie nailing the landing of a ten-year video game saga is that years 11 and 12 leave me wondering if now is the time to bow out, even if years 19 and 20 prove as solid as years 9 and 10.

I took up autocross over the summer, enjoyed the challenge and community therein. I am a pretty slow driver, but it’s the best way to enjoy a sports car I’ve yet found. There’s always a few seconds to find!

Since the season is over here in the cold and rainy northwest, Gran Turismo 7 is filling in. Again, I’m not the fastest, but the practice-like activity ain’t bad. Again, there’s always seconds to find. The “let’s do longer races” add-on released in November for Gran Turismo 7 is pretty good too. But, as of late, I’m trying to do stuff other than video games, so the PlayStation stands idle.

Recently good shows: The Lowdown, Alien: Earth, Pluribus. Currently, re-watching Better Call Saul. I don’t really like the Chuck and Jimmy arc, but the Jimmy becomes Saul arc is one of my favorites out there.

Bobcat, a document editor prototype

Recreating the Canon Cat document interface really lived in my head for a few days there. To such an extent, I’ve been building prototypes based on the idea and exploring the adjacent space.

Thus, my remix of the idea, Bobcat. She may not look like much, but she’s got it where it counts. I’ve added some special modifications myself. 🤓

It’s got a big text field, forward/backward buttons, and a tiny text field for changing the text marker to navigate with. Not quite as sophisticated as Canon Cat, but it’s a start.
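A rough sketch of how that marker-based navigation could work, in Python rather than Bobcat's actual SwiftUI code. The `leap` function name, the wrap-around behavior, and the use of character offsets are all assumptions for illustration; the real prototype may do none of these.

```python
def leap(text: str, marker: str, cursor: int, forward: bool = True) -> int:
    """Return the offset of the next (or previous) occurrence of `marker`.

    Searches away from `cursor`, wrapping around the document (an assumption).
    Returns `cursor` unchanged if the marker is empty or never appears.
    """
    if not marker:
        return cursor
    if forward:
        # Start one character past the cursor so we don't re-find the current hit.
        hit = text.find(marker, cursor + 1)
        if hit == -1:
            hit = text.find(marker)  # wrap to the top of the document
    else:
        # Limit the search so only occurrences starting before the cursor match.
        hit = text.rfind(marker, 0, cursor + len(marker) - 1)
        if hit == -1:
            hit = text.rfind(marker)  # wrap to the bottom of the document
    return hit if hit != -1 else cursor


if __name__ == "__main__":
    doc = "## one\nbody\n## two\nbody\n## three\n"
    position = 0
    for _ in range(3):
        position = leap(doc, "##", position)  # the "forward" button
        print(position)
```

The forward and backward buttons would each call something like `leap` with the contents of the tiny text field as `marker`, then move the insertion point to the returned offset.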

Most of this was vibe engineered. I sketched out the first iteration with my pretty limited, but workable, SwiftUI chops. From there, almost all the document-centric app code was a Claude Code thing.

I feel a little awkward about that. I wish I were “brain coding” more, but the whole point here is to experiment in the material – SwiftUI and document editors. Working with an agent gets me there faster, so I go with it. Per “delegate the work, not the thinking”, I’m exploring ideas that would take me weeks or months to figure out if I were writing SwiftUI on my own. If the ideas bear fruit, I’ll pick up SwiftUI as I go. If not, more of my brain is available for whatever ideas do stick.

What’s next for this prototype? Keep tinkering with building macOS things, explore more ideas like scripting and data models, and lots more iteration. 🤞

It’s the end-game of typing code as we know it

And Thorsten Ball feels fine. Joy & Curiosity #68:

2025 was the year in which I deeply reconsidered my relationship to programming. In previous years I had the occasional “should I become a Lisp guy?”, sure, but not the “do I even like typing out code?” from last year.

…

And the sadness went away when I found my answer to that question. Learning new things, making computers do things, making computers do things in new and fascinating and previously thought impossible ways, sharing what I built, sharing excitement, learning from others, understanding more of the world by putting something and myself out there and seeing how it resonates — that, I realized, is what actually makes me get up in the morning, not the typing, and all of that is still there.

I’m still adapting to this, having previously identified as a “Ruby guy”, “a Vim enthusiast”, and “a TDD devotee”. A couple of times a day, I find myself executing the “careful, incremental steps” tactics that scarcity of code demanded. And then I wonder, what’s the corresponding move for abundance of code? (I think 2026 is, for me, likely about exploring and finding that answer.)

I learned from a couple turns as an engineering manager that it’s the problem-solving, technical and social, that really excites me. If using coding agents means I code and think about the particulars of software development less, in exchange for writing and thinking more about what’s possible with software and teams, that’s a fine trade-off.

What if we don’t have to worry about how often someone or something would have to redo a contribution? What if we don’t have to worry about in which order they produced which lines and can change that? We’ve always treated auto-generated code different from typed-out code, is now the time to treat agent-generated PRs and commits different? What would tooling look like then?

I’m with Simon Willison here: what we produce now as software developers is trust-worthy changes/contributions that do the thing we say they do and don’t break all the other things.

Most teams are over-indexed on the “produce code” part and short on the “make sure we didn’t break other things” part. I suspect, for a few months/years, we’re going to see lots of (weird) ideas about how to shift from scarcity of code to scarcity of verification. I’m not sure that coding agents can help us slop/generate our way out of that, at least not in January 2026.

Joining a new crew amidst a sea change

I joined a new team as a staff software engineer a couple of months ago, huzzah! Some reflections:

I’m writing TypeScript and Python, after many years of Ruby with a touch of JavaScript. Types are, after about 25 years of software development, part of my coding routine. They’re not so bad these days. It finally feels like types are more of a help than a hindrance.

Thankfully, this code is not too long in the tooth, so the dev loop is fast and amenable to quality-of-life improvements. But, it’s a big, new-to-me codebase and a deep domain model. I wouldn’t get much done were it not for coding agents, their ability to help me discover new parts of the code, and having spent much of the past several months practicing at steering them. So all that Claude Code experimentation was time well spent!

The team uses Cursor. I gave it a try and am mostly onboard with it. I still think JetBrains tools are more thoughtfully built than VS Code and its forks. But, Cursor’s agent/model integration is pretty dang sweet, I can’t argue with that.

And, Cursor’s Composer-1 model is fast and sufficiently capable. I think there’s something to be said for faster models that can work interactively with a human-in-the-loop. Cooperating with the model more like the navigator in a pair programming situation than a senior developer vetting the work of a junior developer. It feels like OpenAI/Anthropic/etc. really want to show off how long their models can work unattended. But I think that only magnifies the problem of reviewing a giant pile of agent-generated code at the end.

I suspect this is going to change our assumptions about tradeoffs on ambition and risk. If we’re lucky, we can replace a lot of soon-to-be-legacy process and ceremony that was about hedging and had nothing to do with the quality of product or code at all.

I wonder: besides wishing code into existence, which costly things are now cheap-enough? Agents reviewing agent-generated code doesn’t work that well yet. But, agents can tackle refactors, documentation writing, and bespoke tool creation. All the things we’ve previously been forced to squeeze into the time between projects. The cobbler’s children can now have pretty good shoes.

The hand we dealt

My parents’ (and their parents’) world seemed more stable, somewhat knowable. (It probably wasn’t.) Even if your situation was low agency, if you did what you were supposed to (the life script) long enough, then things probably ended up alright. Scarcity before, abundance later.

My generation (millennial or X) and the ones adjacent came along and accelerated things. Stability declined as dynamism and knowability were within easier grasp, yielding greater individual agency. But now change was the only constant. More folks did what they wanted to and got by. But playing the life script, doing what you were supposed to do, was still pretty effective.

Jury’s out whether that will collapse just as these generations retire.

The next generation are going to change the balance too. What we gave them, in terms of technology and culture and politics and the environment, could make for wild, weird times. I don’t think we should besmirch them too much, if possible, for what they do with it. If anything, we should find harmony with how they played the hand we dealt.

Three great clarinet-adjacent sentences

I DID NOT KNOW what was going to come from Angela’s clarinet. No one could have imagined what was going to come from there. I expected something pathological, but I did not expect the depth, the violence, and the almost intolerable beauty of the disease.

– Kurt Vonnegut, Cat’s Cradle

Coding for the sake of typing in code

It’s not gone, but it’s on the way out. Brain coding is now: modeling, system design, collaboration, and problem solving. And increasingly: context switching, mentoring and coaching, delegation, execution, continuous learning.

Spicy but, with some reservations, I agree:

I believe the next year will show that the role of the traditional software engineer is dead. If you got into this career because you love writing lines of code, I have some bad news for you: it’s over. The machines will be writing most of the code from here on out. Although there is some artisanal stuff that will remain in the realm of hand written code, it will be deeply in the minority of what gets produced.

– Paul Dix

If you ever had “artisan” or “craftsperson” near “code” in your identity, then buckle up. You may find the next years challenging and un-fun. (I had “Expert Typist” for quite some time in mine. Ouch.)

…virtuosity can only be achieved when the audience can perceive the risks being taken by the performer.

A DJ that walks on stage and hits play is not likely to be perceived as a virtuoso. While a pianist who is able to place their fingers perfectly among a minefield of clearly visible wrong keys is without question a virtuoso. I think this idea carries over to sports as well and can partially explain the decline of many previously popular sports and the rise of video game streaming. We watch the things that we have personally experienced as being difficult. That is essential context to appreciate a performance.

– Drew Breunig, A Comedy Writer on How AI Changes Her Field

There is a certain virtuosity in someone who can put a lot of code out there quickly. But, only in the sense that they can “avoid all the wrong keys”, taking the most direct path to solving the problem or learning more about the domain. Navigating false paths, writing the right code for the moment, no more or less than needed, is the thing. Whether it’s an agent or a human, pumping out tons of code that requires thorough review for style and purpose is no longer the game.

I suspect this means that writing, film-making, and music are unlikely to fall victim to generative AI. At least, in the scenarios where art is the draw. Ambient music of all kinds, for airports or beer commercials or in-group signaling (looking at you, save a horse/ride a cowboy), is probably going to change drastically due to generative AI. The same goes for writing that isn’t trying to say something and moving images that exist only to fill in the time.

Perhaps, more than ever, data is the asset. We could delete a lot of the code as long as the data remains accessible and operations continue. 🤔