2024

Reading well in late 2024

It was a good year of reading, for me. I read some big books. I got more insight out of the books I read. Furthermore, I read things I don’t normally read, like philosophy and books about octopuses that help some troubled folks find their way in life.

A few things worked well for me, so here I am sharing them.

Bring a (computer) assistant

I used ChatGPT and Claude to ask questions about tricky passages in non-fiction and philosophy books. This kept me “in the text” more. I was less distracted by wondering what some term meant or the context of a historical reference. LLMs are great for asking questions about or summarizing passages, expanding upon interesting ideas, and getting historical context.

The “world knowledge” trained into these contemporary LLMs is sufficient to answer most questions without the need to provide extra context. I haven’t found the need to fiddle with trying to load the text of books I’m reading into an LLM to get it to answer coherently.

As ever, trust but verify anything a computer masquerading as a human tells you.

Read actively

Laid back on the couch, propped up on a comfy pillow, bathed in natural light (not-too-bright, not-too-gloomy), and just the right temperature. This sounds like the portrait of an ideal reading situation.

But I’ve found that reading challenging books, and getting more out of them, requires a more upright and active posture. Holding the book open at a desk with one hand, with a pen or highlighter at the ready in the other, is the ideal. Hands-on, it’s important.

Highlighting, summarizing, annotating, and commenting on interesting passages keeps my mind in the game for longer. I’m more likely to return to the ideas and get something out of them. I often combine this with my 🤖assistant to summarize dense passages, ask questions about something I think I’m missing, or to expand on words/ideas I don’t already know.

Always Be Choosing (Good Books)

It does no good to read better if I’m reading things that I don’t enjoy or learn from.

I regularly parallelize my reading across a few genres, at least fiction and non-fiction. At times, comics, philosophy, or technical books. At other times, I’ve tried to cluster a few books in the same topical area and enjoyed it. Fiction is the least parallelizable genre, in my experience. It’s tricky to keep multiple fictional storylines/characters in my head, in the same way that holding the button mappings for multiple games in my head is tricky.

I have enjoyed doing Commonplace Philosophy read-alongs. I’m getting a lot out of the Arendt read-along (a very challenging text, for me), the Aristotle Ethics one was insightful, and I’m really looking forward to the one on The Dispossessed. So, “philosophical sci-fi” will probably figure highly in my 2025 reading choices.

Clustering around a topic, another idea I picked up from Tyler Cowen, is fun when I find a few books stacked up around a topic. That said, given I choose to read tome-like non-fiction in particular, having three non-fiction books in progress at one time is not often workable.

The flip side of selecting good material is changing my mind. I’m still learning to stop when the material isn’t meeting my expectations. Not only should I abandon more books, I should consider aggressively skimming or summarizing them via an assistant/copilot when they turn out to be too thin or simply not the right thing.

You (still) can’t beat a dumb-old Kindle

Reading on a Kindle is more pleasant than an iPad.

Despite better functionality on iPad for active reading, it’s way easier to read on a Kindle device. It’s partly down to size and partly down to lack of functionality, as ever.

It’s hard to overstate the benefit of reading speed and the difficulty of distracting yourself when reading on a Kindle. 🤷♂️

That said, the Kindle app on tablets does have a few things going for it, and I hope these things will someday come to a reasonably distraction-free Kindle device:

- “Read it to me”: not audiobooks, just plain old text-to-speech voices. It makes some kinds of reading easier to sustain because there’s a voice to focus on and keep up with.

- Adding notes and connecting dots to highlights becomes a “secondary session” activity when reading on a Kindle alone. Ideally, that kind of entry would be easier on a Kindle device. I didn’t realize there was magic in the iOS keyboard implementation until I tried to type quickly on a simplistic Kindle. Whatever Apple has implemented there, Amazon should pick up on it.

A wishlist for 2025

My kingdom for short, dense non-fiction instead of short non-fiction that is merely a longer magazine article restated several times.

Comic-style “primers” on deep topics like Sartre and chaos theory are my favorite way to start on big ideas. I hope more complicated topics and wicked problems will get this treatment in 2025, and that I’ll discover said primers.

I’d definitely kick the tires on a slightly better-integrated way to read long-form text (i.e., books) with an LLM-powered assistant at hand. The bar is low here; right now, I’m copying and pasting between apps to make this work.

Maybe Amazon, Remarkable, Boox, or even Apple will make the reader/hardware situation more exciting in 2025!

Reading with a computer assistant (an LLM) to answer questions, summarize dense paragraphs, and expand on ideas has been one of the ways I leveled up at reading over the back half of the year.

You don’t have to upload any book into the system. The Great Cosmic Mind is smarter than most of the books you could jam into the context window. Just start asking questions. The core intuition is simply that you should be asking more questions. And now you have someone/something to ask!

— Tyler Cowen, How to read a book using o1

Extremely true from @tylercowen. Not just for books, but for almost everything. Music, movies, Netflix documentaries, essays, even Twitter threads, codebases and strategy docs, PRDs, the marginal benefit to asking more questions has increased dramatically even as the cost fell.

As ever, trust but verify you’re not incorporating an AI hallucination into your human brain.

The collected wisdoms of Calvin Fischoeder (voiced by Kevin Kline) from Bob’s Burgers:

A bet is a bet, Bob. I once lost thirty thousand dollars on a horse. She just ran off with it.

– “The Kids Run the Restaurant”

I lost the year 1996 to schnapps. I still don’t know what the Macarena is. D-Don’t tell me. I’ll figure it out.

– “Eggs for Days”

It smells weird everywhere, sir. That’s how you know you’re alive.

– “The Taking of Funtime One Two Three”

And, in song form: Nothing makes me happy, The Spirits of Christmas.

A low-road LLM prototype, with FastHTML

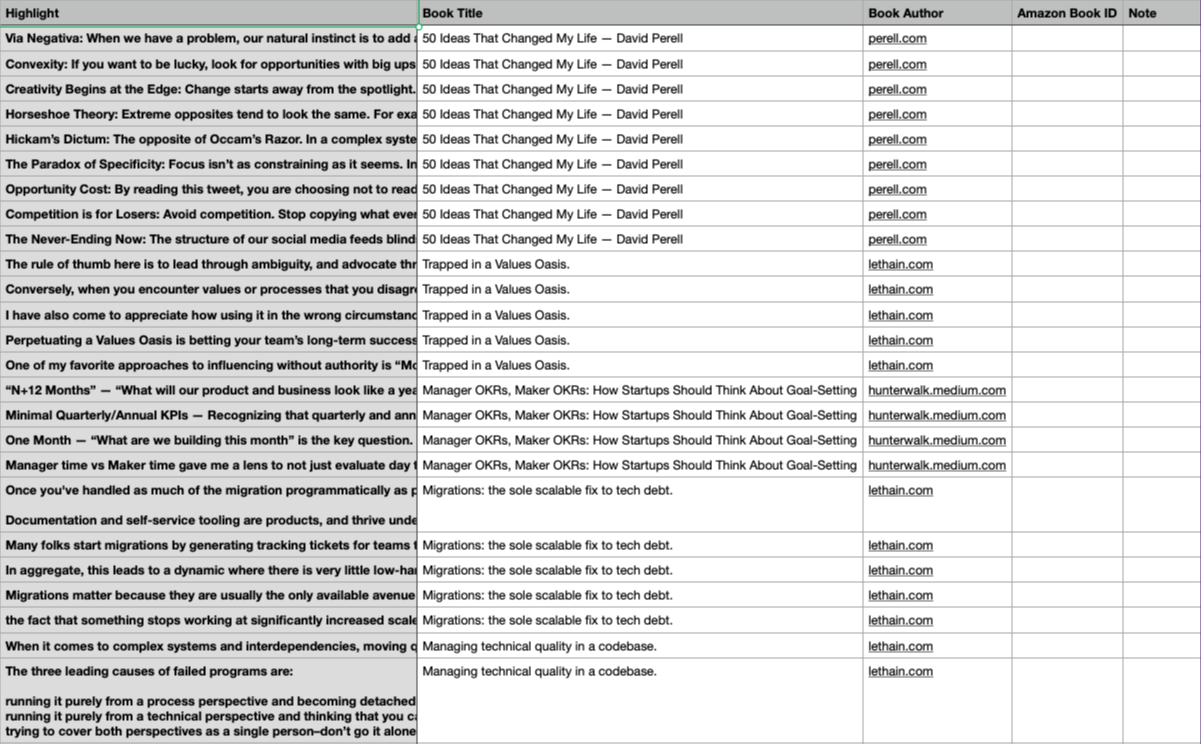

I wanted to tinker with llm and “AI engineer” up a humble tool for working with my notes, writings, highlights, etc. Emphasis on humble: if I could tinker with any one of those datasets quickly and iteratively, I would mark it as a win. (Previously, Scaling down native dev.) Medium story short, I exported some of my reading highlights, loaded them into a database, and wrapped a quick UI around it to navigate my highlights via embeddings rather than keyword search. A few short sessions later, maybe a few hours total, I had something interesting working. 💪🏻

1. Data

For several years, I’ve used Readwise to capture highlights and search/review them later. Handily, Readwise offers CSV (and tree-of-Markdown-files) exports of all one’s highlights. As data formats go, CSV is nearly perfect for this exercise. It’s structured, so no parsing is required to shoehorn it into a low-ceremony data pipeline. That said, Readwise’s export isn’t perfect: notably, it lacks the URLs the highlights came from. But for experiments, it’s a start.

2. Pipeline

Thanks to sqlite-utils, I can generate a SQLite database from the export CSV. This all happens by convention with the sqlite-utils command line.

```just
db:
    sqlite-utils insert \
        highlights.db \
        highlights \
        data/readwise-data-2.csv \
        --csv \
        --pk id
```

No bespoke (Python) code is required in this whole pipeline process. I ended up encapsulating the steps in a Justfile, but anything that can run shell commands would have done the trick.
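None of this needs Python, but to make the “by convention” part concrete, here is roughly what the `sqlite-utils insert ... --csv --pk id` step amounts to, written as a stdlib-only Python sketch. It is an approximation, not sqlite-utils’ implementation: it stores every column as TEXT and skips the type detection, encoding handling, and other edge cases the real tool deals with. The `insert_csv` helper and the sample CSV are hypothetical.

```python
import csv
import io
import sqlite3


def insert_csv(conn: sqlite3.Connection, table: str, csv_file, pk: str = "id") -> int:
    """Create `table` from the CSV header row (if needed) and insert every row.

    Hypothetical helper approximating `sqlite-utils insert DB TABLE FILE --csv --pk id`;
    all columns are stored as TEXT in this sketch.
    """
    reader = csv.DictReader(csv_file)
    columns = reader.fieldnames or []
    if pk not in columns:
        raise ValueError(f"primary key column {pk!r} not found in CSV header")
    # Quote identifiers so headers like "Book Title" work as column names.
    column_defs = ", ".join(
        f'"{c}" TEXT PRIMARY KEY' if c == pk else f'"{c}" TEXT' for c in columns
    )
    conn.execute(f'CREATE TABLE IF NOT EXISTS "{table}" ({column_defs})')
    names = ", ".join(f'"{c}"' for c in columns)
    placeholders = ", ".join("?" for _ in columns)
    rows = [tuple(row[c] for c in columns) for row in reader]
    conn.executemany(f'INSERT INTO "{table}" ({names}) VALUES ({placeholders})', rows)
    conn.commit()
    return len(rows)


# Hypothetical sample data standing in for the Readwise export.
sample = io.StringIO('id,Highlight,"Book Title"\n1,First sample highlight,Book A\n')
conn = sqlite3.connect(":memory:")
insert_csv(conn, "highlights", sample)
```

The one-liner is still the better tool here; the sketch just shows there is no magic between “CSV with a header row” and “queryable table”.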

Step two is to generate embeddings. With the right install, this is another one-liner, this time with llm. We query the database generated in the previous step to give us content to pass through to a text embedding model. The generated vectors are stored into the same SQLite database.

```just
embed:
    llm embed-multi \
        docs \
        --database highlights.db \
        --store \
        --sql 'select id, highlights."Book Title" as title, highlights."Highlight" as content from highlights' \
        --model mpnet
```

There is no step three! The data is ready to query for “semantic”, not keyword, similarity search. To prove to myself I had an end-to-end working proof of concept, I used this snippet to query the data:

```just
# $ just query "now is not the time for notes"
query q:
    llm similar \
        docs \
        --database highlights.db \
        --content '{{q}}'
```
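`llm similar` embeds the query text with the same model and compares it against every stored vector; my understanding is that it ranks by cosine similarity. The toy Python sketch below shows that ranking step in isolation. The vectors are made up, `cosine_similarity` and `most_similar` are hypothetical names, and this illustrates the technique rather than reproducing llm’s own code.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two non-zero vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def most_similar(query_vec: list[float], stored: list[tuple[str, list[float]]], n: int = 3):
    """Score every stored (id, vector) pair against the query and keep the top n."""
    scored = ((doc_id, cosine_similarity(query_vec, vec)) for doc_id, vec in stored)
    return sorted(scored, key=lambda pair: pair[1], reverse=True)[:n]


# Hypothetical 3-dimensional "embeddings"; real models produce hundreds of dimensions.
stored = [
    ("1", [1.0, 0.0, 0.0]),
    ("2", [0.9, 0.1, 0.0]),
    ("3", [0.0, 1.0, 0.0]),
]
top = most_similar([1.0, 0.05, 0.0], stored, n=2)
```

A brute-force scan like this is probably fine at the scale of one person’s highlights; it only starts to hurt once the collection gets large.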

3. Prototype

Next, I put it all together in a FastHTML app. This was fun! FastHTML is great for this kind of bespoke, informal hacking. Caveat: some of this may not be idiomatic Python. I’m just coming back to Python after not having written it seriously for twenty years.

- Using the `dataclass` decorator on a class, plus a method for converting an object to a “FastTag”: love this for low-ceremony but typed data modeling.

- I finally got a grasp of Python’s generator syntax. I want to like `itertools`, but it’s a pain to use. (🌶️ Ruby’s `Enumerable` remains undefeated.)

- JSX-like syntax to structure UI in code is great. This approach falls down every time I put a designer in front of an editor, but I’m going to call it: this is the mark-up/UI-construction ergonomics developers crave.

- There’s no need for a layered web-app approach here. There’s a `similar` function that turns query text into similar highlight “model” objects. That’s a nice affordance for working quickly and loosely.
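A framework-free sketch of the shape those bullets describe: a dataclass for a highlight, a method that renders it to markup, and a generator that lazily yields model objects. To keep it runnable with only the standard library, it renders plain HTML strings instead of FastHTML components; `Highlight`, `to_html`, `highlights_from_rows`, the score cutoff, and the sample rows are all hypothetical.

```python
from dataclasses import dataclass
from html import escape
from typing import Iterable, Iterator


@dataclass
class Highlight:
    """One highlight; the fields are hypothetical, mirroring the embed step's columns."""

    id: str
    title: str
    content: str
    score: float

    def to_html(self) -> str:
        """Render as an HTML fragment (a stand-in for returning a FastHTML tag)."""
        return (
            f"<article><blockquote>{escape(self.content)}</blockquote>"
            f"<cite>{escape(self.title)}</cite></article>"
        )


def highlights_from_rows(
    rows: Iterable[tuple[str, str, str, float]], min_score: float = 0.5
) -> Iterator[Highlight]:
    """Lazily yield Highlight objects for rows scoring at or above min_score."""
    for row_id, title, content, score in rows:
        if score >= min_score:
            yield Highlight(row_id, title, content, score)


# Hypothetical (id, title, content, score) rows, e.g. from a similarity query.
rows = [
    ("1", "Book A", "First sample highlight", 0.82),
    ("2", "Book B", "Second sample highlight", 0.31),
]
page = "\n".join(h.to_html() for h in highlights_from_rows(rows))
```

The generator keeps filtering and rendering in one pass without building intermediate lists, which is most of what I wanted from `itertools` anyway.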

Conclusions

Python has pleasant things going on lately. Even a career Ruby developer like myself can find kind things to say. 😉

The trickiest part of building with LLMs and generative AI is source data. Prompting a model as-is is not much more interesting than using ChatGPT or Claude. Ergo: look to one’s notes, posts, highlights, etc. to find compelling data to build upon.

Variations on RAG (this is probably a bit short of calling itself RAG, but it’s very close) produce more appealing results than keyword indexing, but not by much. I don’t think it’s the center of a product yet; more of a sidebar or enhancement.

All of this took less than five hours to build. I’ll probably end up spending a couple of hours total writing it up. This is the kind of “working with the garage door up” thing I want to do. 💯📈

Currently reading

- 🐙 Van Pelt, Remarkably Bright Creatures: on an octopus and a widow. A recommendation from my sister. I could go for more octopus narration.

- 🕰️ Burkeman, Four Thousand Weeks: the thesis is that no amount of time management gives you infinite time, so you might as well take advantage of the present.

- 🧐 Arendt / The Human Condition: reading along with Commonplace Philosophy.

- ⏸️ Holiday/Right Thing, Right Now: on morals, through the lens of many historical tales and Stoicism.

- ⏸️ Lichtenberg/The Waste Books: turns out, witty one-liners and reflections on life look about the same in notebooks from the 18th century as they do on modern social media. 😅

Ideas stuck in my head

Is there really a way to push back on the complexity of the web? — Tom MacWright on the plight of modern, full-stack software development.

On loving your fate, how to handle pressure, and the value of being proactive yet positive – James Clear on tackling the challenges of life.

You don’t need Scrum. You just need to do Kanban right. — Lucas F. Costa on how Scrum is a hobbled version of Kanban, if you squint right.

The Trouble with Tools. Daniel Miller on notes, tasks, projects, and the overlapping tension of using software to manage them.

It remains very true that all software expands to the point that it can host checklists. I try not to worry too much when a task list emerges from a note. If I can keep that task list short-lived, i.e., finish it within a working session, it’s not a problem.

I continue to wish Things would do their very well-considered thing and make notes a first-class concept in their app. But only if they can solve it with their consistently high quality of thoughtfulness and execution. Until then, I’m thrilled with them not bolting it on at all.

Lately, I’m choosing my apps for simplicity of general-purpose use. Customization and scriptability are great on paper. But then I fiddle too much instead of getting stuff done.

That said, I have three different notes-shaped apps in my daily workflow. I’m basically fine with this! Maybe I’m done with all-knowing, open-ended, monolithic apps for Managing All the Work. 🤞

Bruce Springsteen’s “No Nukes” concert is one of the better early-period surveys of his work. But what I’m really after, lately, is a middle-period summary of his discography: a live album highlighting the material from Born in the USA through The Rising. Two very different peaks in his career, with some of his most unique albums in between.

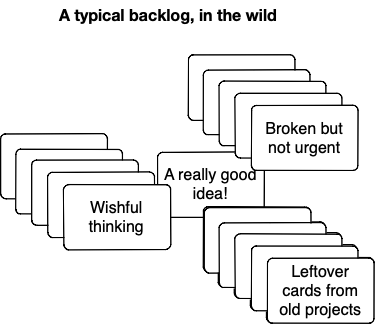

Backlogs

Can’t live with ’em, can’t live without ’em 🤷♂️

A product team (at least the ones that have been working for more than three weeks) has a backlog of work they’d love to tackle, but now’s not the right time. Maybe it’s not the most important, a practical solution isn’t in-hand, the technology isn’t ready yet, or a dependency hasn’t started work yet. Either way: a growing, somewhat discouraging list of things they want to do but can’t yet.

Over time, a backlog evolves into many forms. None of them are ideal. We have to look at backlogs for what they are: an artifact of time’s finite-ness and our finite ability to do work in the present.

A “big pile of sadness backlog” doesn’t acknowledge limitations in time and capacity. It imagines a future where we can do everything we’ve ever wanted. The backlog actually gets shorter. All those problems we once wanted to solve, we can. We are also a bit taller, more attractive, and feel a bit younger while we’re at it.

But that day never comes, and we’re left with a sad backlog of things. Almost all of them (and this is the nefarious part) are less important than what we’re currently doing, but still, we think, important. So it stares at us from the shadows, a bit sad, suggesting that we are failing at our job by having this large backlog of ideas and promising projects. At least we think they’re promising! More likely, in my experience, attempting to triage the pile-of-sadness backlog yields two more meta-projects around organizing the sadness to separate the truly sad from the merely unfortunate. 😮💨

It’s more helpful to look at a backlog as numerous maybe-good ideas sent to our future-selves. By definition, a backlog is speculation. We thought executing on these projects and tasks would improve things, but we won’t know until we do. Schrödinger’s cat and all that. Storing all that speculation away in a pile is healthy. If it turns out one of these ideas is the right starting point for the next most important problem we face, we’ll pull it out of the backlog and give it a think. Otherwise, it stays there, putting no current work at risk.

The contents of a backlog are frozen in time. The tasks, projects, docs, designs, screenshots, diagrams, etc. all reflect a point in time. Some call this rot, implying “hey, we should have been better about keeping this campsite clean and used our (infinite 😜) time to tend it”. IMO that’s not the best approach; better to regard aging tasks as issues and solutions from a point in time. They may no longer reflect the circumstances the product and team currently face. Items in a backlog necessarily need updating by a PM or developer to figure out if they still make sense, would solve the problem, or are a direct solution to that problem.

I have quite the extended personal backlog of items to read and project ideas. When I regard them as missed opportunities, as evidence that I could have been at least a little more diligent in managing my time, it’s stressful and discouraging. When I instead regard them as a pile of ideas in reserve for mornings when I wake up and none of my current hobby projects are enticing, or what I’m reading isn’t doing it for me, then those backlogs are useful. Granted, said lists aren’t getting much shorter.

Perhaps a backlog is a defense mechanism. It is, at times, a way to deliver a soft no, to ourselves or collaborators. “Not a bad idea, but not something we can get to right away. It’s in the backlog. Maybe someday we’ll come upon it.”

Or, you may have an impeccable backlog! All killer, no filler; like a “no-skips” album, but in product backlog form. If you’re out there, please let us know how you got there and how much effort it takes. 😆 (Really, if you’re out there, I’m intrigued!)

The flip side is no backlog at all. The project work is a river and if something interesting floats past, you seize upon it. Otherwise, you let it float downstream and don’t worry about it too much. Or, there’s no team backlog, but the team members keep a list of ideas/tasks/projects that might be interesting in the future and bring them up at opportune moments. That works too!

I’m reading Four Thousand Weeks. Thus, my mind is often on finitude, “the state of having limits or bounds”.

Bear with me as I consider tactics for time scarcity that aren’t “try harder and use this method to organize tasks in this way that worked for one person a while back” 🙃

Managers and coding: “it depends”, but go for it anyway

🌶️ Hot-take: if you’re a manager and find you¹ miss building…then build²!

- On your time: mornings, weekends, national holidays.

- Whatever you want: tools for yourself, something frivolous, something practical.

- However you want: in an esoteric technology, in a boring technology, in a domain you’ve always wanted to explore, with LLM copilots, building every piece from scratch, without using conditionals, whatever!

Avoid:

- Swooping into your team’s current projects³.

- Imposing your discoveries/ideas on unrelated code reviews.

- Inventing projects and putting yourself on the critical path.

- Building and handing off a proof-of-concept to an unsuspecting teammate to finish.

- Thinking the grass is greener on the hands-on/IC side.

Don’t react to the bad parts of your previous role. It’s increasingly tricky to ride the pendulum between IC and leadership roles. You may only get to pull that lever once. Maybe building something for yourself will temper the temptation to go back to an IC role.

Instead, see if you can scratch whatever hands-on building/coding/designing itch you have on your own time. You’ll necessarily have to scale it down. Honestly, that’s a great constraint! If you tried to build something big, you’d have to lead and manage it and, if you recall, that’s what we’re taking time away from in the first place here.

Follow your curiosity. The very best part about building on your time as a manager is you don’t have to make great decisions. Whatever is of interest, go with it.

Want to build a compiler, but you work in web apps? Go for it. Want to build a game, but you work in compilers? Go for it. Want to build an asset tracking web app in a really particular way, but you work in games? Go for it. Want to write in Haskell, but you work in Ruby? Go for it.⁴

Go for it.

1. As always, when I say “you”, I mean “you, the reader”, which is often “my previous or future self”. 🙃 ↩︎

2. Previously: Managers can code on whatever keeps them off the critical path. ↩︎

3. Don’t get yourself back on the critical path of projects. Your time and energy operate within different constraints now, and they’re largely incompatible with being deep and hands-on with the code. ↩︎

4. Caveat: you are about to have a lot of arguments with a new nemesis, the compiler. ↩︎

We cannot truly know whether we are not at this moment sitting in a madhouse. (Georg Christoph Lichtenberg, The Waste Books)

An astounding percentage of Lichtenberg’s quotables are just as relevant today as they were in 1789 when they were written. What’s more 2024 than this particular one?! 🫠

Granted, House Atreides did miss some milestones

A couple of lessons on leadership in Herbert’s Dune:

“Give as few orders as possible,” his father had told him…once…long ago. “Once you’ve given orders on a subject, you must always give orders on that subject.”

Avoid, whenever possible, making decisions for people and teams. It may end up discouraging or preventing them from making any decisions for themselves in the future.

Frequent top-down decisions are the autonomy-killer, they might say. 🤓

Hawat arose, glancing around the room as though seeking support. He turned away, led the procession out of the room. The others moved hurriedly, scraping their chairs on the floor, balling up in little knots of confusion. It ended up in confusion, Paul thought, staring at the backs of the last men to leave. Always before, Staff had ended on an incisive air. This meeting had just seemed to trickle out, worn down by its own inadequacies, and with an argument to top it off.

This is what bad meetings feel like; they deflate like a balloon. Worse than indecision, they bring confusion about how to proceed, where efforts really stand, or if the current approach is the right approach. Not necessarily because of debate or disagreement. But, the lack of consensus or direction certainly doesn’t help.

Lists for the past, lists for the future

Reader, I want to (once again) talk to you about the life-changing power of making a list and putting it in order.

Ambiguity of like, “what are we gonna build?” or “how are we supposed to build this?” Or was it supposed to be A or B or why did we make this decision? So having the checklist of “this is the thing,” it is a very, very cheap way to eliminate most of the ambiguity.

– Kristján Pétursson, Human Skills 018 — Creating Clarity From Ambiguity

Task lists are great for capturing and organizing what you want/need to do. They are sometimes good for thinking through how to execute on those tasks and projects.

Ordinarily, I’m too focused on all those incomplete items: the empty check boxes, the lines that aren’t crossed through. So much potential. It’s exciting and overwhelming!

I rarely use my task list to reflect upon what I’ve done: to look back on how much I accomplished on any given day, to capture a post-hoc note or two on follow-ups and what worked, or merely to pat myself on the back for a day of honest work.

So I’m trying this right now! But, applications could stand to add more affordances for looking back in time too. This goes for applications like Things as much as it goes for Jira, etc. It’s probably our work and hustle culture. 🤷♂️ Always looking forward and rarely slowing down to say “hey, I did this, let’s get excited about that!”

One of the nice things about Kanban boards is that you eventually end up with a giant pile of things that were done, and that’s a nice way to feel momentum building as a bunch of stuff gets done. Let’s get more of that.

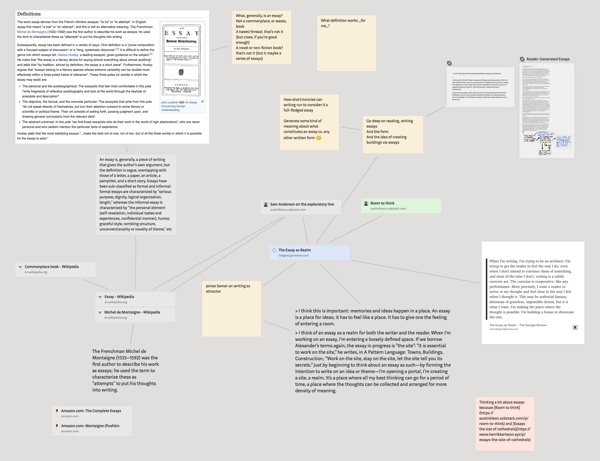

Thinking a bit about essays because of Room to think and Essays the size of cathedrals.

Team sizes & breakpoints

“Dunbar’s number is a suggested cognitive limit to the number of people with whom one can maintain stable social relationships—relationships in which an individual knows who each person is and how each person relates to every other person”.

Corollary: any group size approaching 150 people (Dunbar’s number) includes at least one person who knows about Dunbar’s number.

To paraphrase a classic joke, “How do you know if someone knows about Dunbar’s number? They’ll tell you!” I’m telling you, right now, I know about Dunbar’s number. 🙃

Practically, I think this implies:

- If you’re designing an organization close to but not exceeding Dunbar’s number, you can hand-wave the details of when it grows to exceed Dunbar’s number. Someone will enthusiastically let you know that the organization has exceeded Dunbar’s number and might need reconsideration. 🥸

- For organizations as small as 10% of Dunbar’s number (15), there’s a pretty dang good chance that someone knows about Dunbar’s number. For organizations any multiple of that size, you don’t need to go around telling everyone about Dunbar’s number. Chances are, someone is telling everyone about Dunbar’s number. 😆

My experience in (software) teams is there are breakpoints far smaller than Dunbar’s number that matter even more.

| Team/org size (people) | What changes? |
|---|---|
| 3 | Working consensus/quorum is now a thing. |
| 6 | Super-linearity of personal lines of communication becomes noticeable. |
| 10 | Splitting into teams and managing communications along those lines makes sense. Congrats, you have invented hierarchy and management! |
| 25 | You may not talk to everyone in any given week. Organizing get-togethers takes more than a person-week of effort. |
| 50 | Despite best intentions, there are a few people you don’t know exist, or don’t know what they do. |
| 100 | An old guard/new guard dynamic may form. |
| 150 | Dunbar’s number. |
| 500 | You have one weekly ceremony/routine that is necessitated by the organization reaching some kind of IT scale/process. You daydream about the times when you didn’t have to do this every single week. |
| 1,500 | All-hands company get-togethers resemble full-blown conferences. |
| 15,000 | There are so many possible “the left hand doesn’t know what the right hand is doing” scenarios that it makes my head hurt. |

FWIW, these are all folk rules. I haven’t seen rigorous work that backs any of this up!
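The “super-linearity” in the 6-person row matches the usual back-of-envelope model: a group of n people has n(n-1)/2 possible one-to-one lines of communication. That formula is a standard rule of thumb, not something the table above is derived from, but a quick Python check against the table’s sizes makes the growth concrete.

```python
def communication_lines(n: int) -> int:
    """Number of distinct pairs of people in a group of n: n * (n - 1) / 2."""
    return n * (n - 1) // 2


for size in (3, 6, 10, 25, 50, 100, 150):
    print(f"{size:>4} people -> {communication_lines(size):>6} pairwise lines")
```

Doubling from 3 to 6 people takes you from 3 pairs to 15; at Dunbar’s 150, it’s 11,175.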

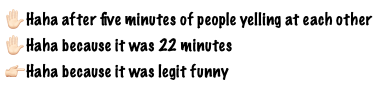

Kinda comedy

Permit me to throw shade at the Emmy Awards for a moment.

Reservation Dogs is a better kinda-comedy than The Bear. Dogs is consistently funny and finds ways to explore the lives, relationships, and histories of the characters in rewarding ways.

The Bear is equal parts endearing and yelling. Once or twice a season, it speaks to craft and intensity. Those are my favorite episodes. The Fak family is the only undeniably comedic element of The Bear. Give us a spin-off of that and let it legitimately win all comedy awards.

The Bear belongs in the drama category, but isn’t the length of a drama because no one could sustain watching an episode of that show for that long. 🙃

By kinda-comedy, I mean “22-minute shows that are eligible for comedy awards at the Emmys”. The real solution here is for the Emmys to fix their categories in two ways:

- Don’t call “dramatic” shows that are shorter than 44 minutes comedies. This is absurd and an easy fix.

- Unrelated to The Bear, currently: create separate categories for “returning shows” and “ending shows”. Emmy voters tend to reward shows in their last season out of proportion to their competition.

In short: Reservation Dogs was the comedy that Emmy voters think The Bear is. 🌶️

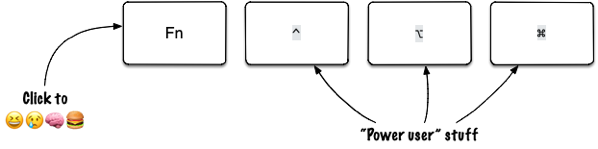

The emotion key

The function key, on iOS and possibly Mac keyboards, is bound¹ to show the emoji picker.

Apple has chosen to bind a whole key, no modifiers or anything, to “convey an emotion”. A (cartoonish) picture is worth a thousand words.

🧠 Smart!

(I could go on for at least five minutes about how Apple’s recent design advantage lies in introducing big features without making them shout-y in the UI. Granted, the marketing is very shout-y.)

1. By default, if I recall correctly. ↩︎

Warm up your network before things get spooky

As I write this, it’s late 2024 and the job market is tough for job seekers. If one finds a job within three months, they’re considered lucky. Some folks go 2–3 times longer in between jobs. If you’re in this boat, break a leg! 🫠

If you’re not actively seeking a job, here’s a thing that helped me go from “well this market sucks” to “welp, job seeking is my full-time job now, the only way out is through”:

I started warming up my professional network when things got spooky at my current job.

Caveat: I could have done even more than I did¹. Despite not taking more steps, the simple act of thinking through “what would I do if the worst happened and my job disappeared?” put me one step ahead, and I was a little more calm after my job did disappear.

1. The network is the work²

A strong, active professional network is the most important tool in this scenario. This is true whether the job market favors job-seekers or employers.

Especially when you’re seeking a job without one in hand, people are happy to respond and help out³. Fifteen minutes of writing and responding to messages could kick-start the process for your next job.

Some helpful questions I asked myself in contemplating how to warm my network back up:

- Who would I work with (again)? If former colleagues were especially easy, awesome, or delightful to work with, a second outing is worth pursuing.

- What kinds of places would I like to work at? I find myself particularly interested in finance-adjacent endeavors.⁴ Tooling is also of interest: anything that makes people’s work lives better resonates with me.

- Why not aim for the bleachers in my next role?⁵ If I could work anywhere or hold any kind of role, where would it be? Perhaps there’s a role out there with all the upsides I’m looking for and very few trade-offs on the downside. Who could connect me to those roles?

2. Take a stroll down accomplishments lane

Another easy thing to do⁶ is to get (back) into the resumé-writing mindset. That is, the self-promotion mindset where you’re talking about all the things you’ve done, how great they were, putting numbers to how much they improved customer outcomes, and generally putting maximum shine on your work.

This is the point where I wish I had kept a good brag doc.

In lieu of a record kept in the moment, I took out some stickies and wrote out what I’d done in each of the last 6+ years at work. The goal here was to shake the dust from my brain and remember all the cool projects we shipped, excellent people I’d worked with, and improvements I’d made across the board. Keep in mind, no feat is too small and the sequence of projects doesn’t matter much! I’m only getting in the groove here. The two-ish page limit on practical resumés will force me to compress this list later anyway.

Don’t feel bad about taking time to do this. At any high-functioning organization with a performance management cycle, what you’re doing here looks exactly like what you’d do for your regular performance review ceremony.⁷ Two birds, one stone!

2a. There must be 50 ways to write your resumé

An updated resumé is a pre-requisite. I’m not (yet!) so internet-famous that I can get by without one. I didn’t start rewriting my resumé right off the bat. Instead, I dusted it off and considered how I’d change it when the time came that I needed it.

Writing about oneself is challenging. It feels awkward, like something one is supposed to avoid. A few insights helped me here:

- My resumé is the marketing/advertising that will yield the job I hold for several years of my life. Multiplied out by annual pay, this document is a tool I’m using to generate the next several hundred thousand dollars that fund my life. Basically, any time I spend on it is worthwhile.

- Ergo, it’s worth spending days, all day, iterating, improving, and trying new things. It was this iteration that helped me get over the cringe of writing about myself.

- Cover letters seem to have fallen entirely out of fashion.⁸ Your resumé now has to do even more work. All the more reason to keep at iterating and customizing it.

- If it helps, write your resumé like you’ve already landed the hiring manager interview.⁹ Convince the reader that they were right to pull your resumé out of the stack. Tell them about all the great stuff you’ve done. This is where you want to refer to all your accomplishments, emphasizing the most recent and those most relevant to the kind of role you’re seeking.

3. One job is plenty

Looking for a new job is an exhausting endeavor. Iterating on resumés, tweaking LinkedIn profiles, and updating online presence consumes a lot of time and emotional energy. That’s not to mention actually finding promising jobs, customizing your resumé to suit the job descriptions, filling in applicant tracking system forms, responding to leads, and scheduling interviews. Phew!

If you can avoid it, don’t seek a new job whilst trying to hold down another one. Especially one that has “gone spooky”. Granted, there’s a lot of luck and privilege wrapped up in that advice!

If your luck affords it, make the search for your next full-time job a full-time job. That is, give yourself a sabbatical of a few months to recharge and focus on the job search. That said, don’t take this route in a market that heavily favors employers, and make sure your savings are topped off in case of surprises.

All this said, preparing for seeking a job is not the same as seeking a job. It took me longer than I’d have liked to realize this. Actively seeking a job, for me, looks more like marketing and sales than building or leading. It’s playing a “numbers game”: the more people you network with and the more jobs you apply for, the better. Thinking through how I would execute that helped!

But there was a hump I had to get over, entirely in my head, about actively and explicitly putting myself out there. I had to let go of some social barriers, like my reluctance to “reach out” to folks via email or LinkedIn. Doing sales-y things, it’s a big growth area for me. 🙃

Finally, a thing I’m learning about this most-difficult job search of my career: every step on the job search is one step closer to finding that job.10

If you find yourself actively seeking a job, or merely feeling the spider-sense tingle about your current role, good luck! I hope this helped.

-

I almost wrote this with “job-seeking is a steady state” as the thesis. I wish I had taken that idea more seriously before, and I hope I won’t let my guard all the way down after I get through my current job search. ↩︎

-

Sun Microsystems is no longer a thing. Ergo, no apologies for paraphrasing their “the network is the computer” tagline. ↩︎

-

Basically everyone in my network whom I’ve reached out to was happy to help me through this challenging liminal state. This is a really nice thing to experience, particularly in our often-dismal times. ↩︎

-

I’m not certain if I would actually like working in finance. Maybe I’m mostly interested in learning more about how that industry works, visibly and invisibly, in our lives and the world. ↩︎

-

I’m unlikely to chance my way into Disney Imagineering, but maybe there’s something a lot like Imagineering within reach (of my network).🤞🏻 ↩︎

-

I didn’t do this before the shoe(s) dropped, but I wish I had. ↩︎

-

No more than quarterly, no less than annually, I hope! ↩︎

-

I’m honestly not sure why. As a hiring manager, I find the cover letter crucial for judging whether the candidate is capable of clear, concise, easy-to-read communication.

I’m going to blame applicant tracking systems and the reality that most job descriptions receive hundreds or thousands of applications. 🤷🏻♂️ ↩︎

-

Advice I heard in improv classes for addressing an audience with confidence: walk out there and address them like you’ve already won them over. Bring the charisma and energy of someone who has already sealed the deal. ↩︎

-

I was not taken by this bit of wisdom at first. But it’s true enough: taking no steps to seek a job gets you nowhere. In context, that’s worth keeping in mind. ↩︎

Bias towards hitting publish

Jeff Triplett, Please publish and share more:

You don’t have to change the world with every post. You might publish a quick thought or two that helps encourage someone else to try something new, listen to a new song, or binge-watch a new series.

…

Our posts are done when you say they are. You do not have to fret about sticking to landing and having a perfect conclusion. Your posts, like this post, are done after we stop writing.

I have found it’s not enough to journal, capture notes, save bookmarks, write in a notebook, post to a blog, participate in a cozy group chat, or engage in public discussion on social media.

Revisit what I wrote. Resurface old ideas. Try to put old ideas together with new ideas. Remix ideas with new thoughts. Repackage them into a new medium. That’s where the compounding of writing and thinking hits the road, so to speak. At least, for me.

Set activation energy low and steer into repetitiveness. I never know which combination of words is going to hit someone’s mental jackpot.

Iteration (take 7)

Kent Beck, Coupling and Cohesion:

The only way to be able to describe something well is to describe it badly 100 times.

I’m forever feeling this one. Many times, I’ve felt the idea or plan is so clear in the document or on the whiteboard. And yet, when other folks try to read the idea or execute the plan in their heads, the connection isn’t made. Back to the drawing board. 🙃

FWIW, “describe something well” is directly related to building software. A good domain model or an intuitive UX interaction is as tricky to describe as a great movie or poem.

All problems are shallow under iteration; the real limitation is producing iterations and collecting feedback.

Hurry up and lose your first 50 games.

— Go proverb

Case in point: I’ve probably written some variation on this blog post nearly a dozen times. Perhaps some day, I’ll get it just right.