2018

It is I, who dwells at coffee shops, who sometimes reads paper books instead of a glass display, who prefers Apple Pay over card swiping when possible.

How I focus more and worry less about the internet

As a long time Rands fan, I highly recommend you partake of the Rands Information Practices and Rands Slack Protocol. Allow me to add some of my favorite tactics.

Get your browser tab situation under control; I get itchy when I have more than several tabs open! (But if you’re the sort who never has fewer than a few dozen tabs open, I still like you.) Move your most important and favorite blogs and websites into a feedreader. I like Newsblur plus Reeder macOS/Reeder iOS. You can even put Twitters and email subscriptions into Newsblur, which is some next-level distraction management.

Learn the keyboard shortcuts. All of them. Dazzle people with your ability to dance across the keyboard and make computers do things. Bonus tip: picking up a mechanical keyboard will make you sound extra amazing but slightly annoy the people who sit close to you.

Customize your system to remove distractions, allow you to focus, reduce drag, and move faster. If other people sit down at your computer and can’t operate it, that’s okay. But, don’t customize it too much. I want to get stuff done, not produce the ultimate hot rod computer for hot rodding computers.

Speaking of hot rodding, do not go too deep on productivity systems. I need a notebook-like app to think in and write stuff down. I need some kind of souped-up todo list. Not much else. They are outboard brains, augmenting my ability to function as an adult and as someone thinking about and/or making things. It’s tempting to read about everyone else’s Extremely Awesome Productivity Setup, but they’re doing different stuff and have different responsibilities; it’s entertainment, not education.

I cannot read the entire internet. Get really good at guessing whether something that comes across your desk is going to better your understanding of the world. Skip all the clickbait and tribal rage stuff. I look for stuff that provides insight into how we got here or what the future might look like.

That said, don’t accidentally filter your dear friends out in the process of managing the information onslaught. Put them in a special list or feed folder and look at it daily. Engage with them like it’s 2008 and the internet is still a promising thing that connects us to our friends.

Finally, I highly recommend you follow Rands’ best bit of advice, which is curiously tucked into the last footnote: replace screens by your bed (as many as possible, at least) with a book.

On decision tables and conditionals

Over the years, I’ve heard a few times about something like Decision Tables (Hillel Wayne):

A decision table is a means of concisely representing branching and conditional computations. In the most basic form, you have some columns that represent the “inputs” as booleans and some columns that represent outputs and effects.

It was usually some variation of teams using something like a truth table to define the logic of their application without using a mess of conditionals. It also turned out that there was a compiler optimization where these tables could be laid out such that figuring out which behavior was appropriate to all the inputs was faster than conditionals would have been.

Wayne doesn’t mention anything like this. But, there is mention of using decision tables with an RSpec macro to verify code behavior with less boilerplate assertion logic. So that’s neat!

If I had to sum up my style of coding, I’d say probably a third of it is about reducing the time I spend reading or writing conditional code. That’s where most of the bugs and frustration are. Pushing them down into the compiler, runtime, or database is a fun exercise too.
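Here is a minimal sketch of the idea in TypeScript. The shipping scenario, the field names, and `decideShipping` are all made up for illustration; none of it comes from Wayne's article. The inputs are boolean columns, the outputs are the remaining columns, and a lookup replaces the nested conditionals.

```typescript
// Hypothetical example: shipping rules written as a decision table.
type ShippingInputs = { isMember: boolean; isHeavy: boolean };
type ShippingRule = ShippingInputs & { fee: number; carrier: string };

// Each row pairs one combination of boolean inputs with its outputs.
const shippingTable: ShippingRule[] = [
  { isMember: true, isHeavy: true, fee: 5, carrier: "freight" },
  { isMember: true, isHeavy: false, fee: 0, carrier: "post" },
  { isMember: false, isHeavy: true, fee: 20, carrier: "freight" },
  { isMember: false, isHeavy: false, fee: 4, carrier: "post" },
];

// Look up the matching row instead of walking nested if/else branches.
function decideShipping(inputs: ShippingInputs): ShippingRule {
  const row = shippingTable.find(
    (r) => r.isMember === inputs.isMember && r.isHeavy === inputs.isHeavy
  );
  if (row === undefined) {
    throw new Error("decision table is missing a row for these inputs");
  }
  return row;
}

// Completeness check: two boolean inputs need 2^2 = 4 distinct rows.
function tableIsComplete(): boolean {
  const seen = new Set(shippingTable.map((r) => `${r.isMember}|${r.isHeavy}`));
  return seen.size === 4 && shippingTable.length === 4;
}
```

The appeal is that a missing or duplicated case shows up as a missing or duplicated row, which is easier to spot than a forgotten `else`.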

My favorite question is “why?”

At some point in elementary or junior high school, we were taught all our essays should answer one of five questions: who, what, where, why, or how. These were, at the time, “the five W’s” of writing.

Why is my favorite because it provides context. Asking why usually gets me to the bottom of the situation. It often encapsulates the who/what/where/how/when. It’s the most open-ended, which is probably the best part. Asking why almost always makes the next most important question obvious.

I still like the other ones too. When often yields interesting histories or chronologies. Who can lead to a nice bit of biographical or character background. How is great when I care most about progress over deep context. Where can give me a little bit of backstory about why a certain location or geography is important.

Asking why wraps up all of those. Why are shipping containers a standard size? Who decided it should be that way? How did that drastically change global shipping? When did this occur and what changes can we observe from it?

One word, one question. So much to learn!

The systemic sublime makes our world more legible

My new favorite category on Kottke.org is the systemic sublime, wherein our networked, often inscrutable world is made more legible. The connections between ideas and their instantiations are not always obvious. The history, and often the path dependence, of how we got here make it a little easier to understand our world.

Other fine purveyors of the systemic sublime include Alexis Madrigal, Matt Webb, Tom Armitage, Ribbonfarm, Steven Johnson, and Michael Lewis. My favorite thing about these authors is that they answer “why?” by connecting the dots between “how”, “who”, and “what”. It’s my favorite kind of thinking!

Advocacy = empathy + speaking to someone else’s conceptual framework. When I’m trying to convey an idea from my head to someone else’s head, the biggest challenge is converting from my conceptual framework and values to theirs. Hence, The words that work:

For example, one partner in a conversation might use concepts like power and tradition and authority to make a case, while the other might rely on science, statistics or fairness. One person might argue with tons of emotional insight, while someone else might bring up studies and peer reviews.

Rare is the success of advocacy that doesn’t involve a lot of empathy, understanding your conversation partner, and letting go of the little details to reach a new local maximum of mutual understanding.

It's dangerous to go alone, take dotfiles

Yesterday I was handed a fresh, nifty new laptop. This is, for me, mildly terrifying. The last time I did a clean operating system install was seven years ago. I’ve carried an idiomatic mixtape of dotfiles, macOS preferences, files, and cruft with me on my personal laptop ever since.

A brand-new, stock laptop is a shock to my highly acclimated and particular system.

I started contemplating how exactly I could get set up relatively quickly. At the same time, I want to pay down a little bit of automation debt. By the next time I’m faced with this situation (when I buy my own computer, if a disk is struck by lightning, etc.) I shouldn’t feel so much like a deer in headlights.

At first I thought I’d attempt to transmogrify my current lightsaber into something like Gina Trapani’s dotfiles. I like how this is structured, and that the initial setup of apps and Unix-y things is bootstrapped by Homebrew. But then I remembered Thoughtbot’s laptop and dotfiles and convinced myself this was the way to go.

Indeed, laptop helped me cut the Gordian knot of setting up my new machine so I can write code and feel at home on it. I highly recommend it if you have the means.

New dotfile repo forthcoming!

I’m starting a new job tomorrow. I decided to take a week off between jobs, mostly to make a quick trip to Disneyland.

I hid most social media apps away, stopped paying attention to news, and caught up on reading. I gave myself a 3-day weekend to decompress before our trip, went to Disneyland for 3 days, and had a 3-day weekend to relax before I start the next thing. I’ve done a fair bit of writing, watching movies, tinkering with Ableton, and playing games too. A great vacation sandwiched between two stay-cations, in the lexicon of our times.

My mind feels like it’s had a chance to reset and get back to a neutral state. I’m hoping this will help me keep my frame-of-mind looking forward as I start the next job. This was a great decision and I highly recommend you do something like this (granted, Disneyland isn’t everyone’s thing) yourself, if you have the means.

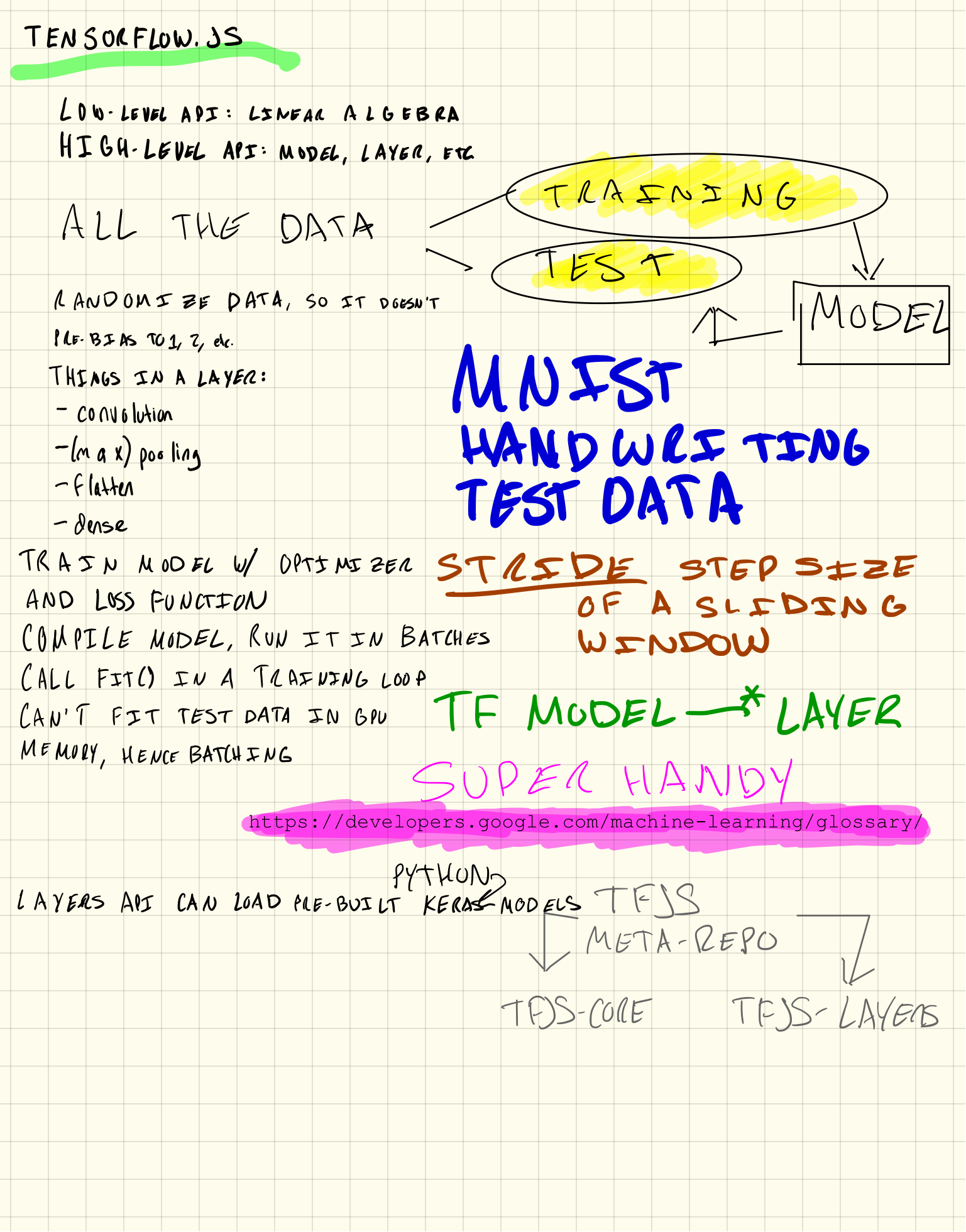

Scribbling through TensorFlow.js

I’ve been trying to wrap my head around machine learning lately. Today I worked through the TensorFlow.js tutorial on recognizing handwritten numbers with a neural network. Herein, my notes and scribbles.

TensorFlow: it’s about turning linear algebra into models built of layers built of math

My previous forays into machine learning left me a little frustrated: I could tell there were a language, patterns, and notation to this, but I couldn’t see them past the novelty of new-to-me words like sigmoids, convolution, and hidden layers. Turns out those are part of the language.

But the really handy idioms are encoded in TensorFlow’s high-level model-and-layer API. A model encapsulates a chunk of machine learning that can be trained to classify inputs (images, texts, etc.) based on a mess of training data (pre-classified stuff). Every model is built from a network of layers; layers use linear algebra to transform numbers into classifications.
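To make "layers use linear algebra to transform numbers into classifications" concrete for myself, here is a rough sketch of a single dense layer followed by softmax. It is plain TypeScript, not the TensorFlow.js API, and the names (`denseLayer`, `softmax`, `classify`) are my own, chosen for illustration.

```typescript
// One dense layer: output[j] = sum over i of input[i] * weights[i][j], plus bias[j].
function denseLayer(input: number[], weights: number[][], bias: number[]): number[] {
  return bias.map((b, j) =>
    input.reduce((sum, x, i) => sum + x * weights[i][j], b)
  );
}

// Softmax turns raw scores into probabilities that add up to 1.
// Subtracting the max first keeps Math.exp from overflowing.
function softmax(scores: number[]): number[] {
  const maxScore = Math.max(...scores);
  const exps = scores.map((s) => Math.exp(s - maxScore));
  const total = exps.reduce((a, b) => a + b, 0);
  return exps.map((e) => e / total);
}

// The predicted class is the index with the highest probability.
function classify(input: number[], weights: number[][], bias: number[]): number {
  const probabilities = softmax(denseLayer(input, weights, bias));
  return probabilities.indexOf(Math.max(...probabilities));
}
```

A real model stacks several layers like this, and a library does the matrix math far more efficiently, but the shape of the computation is the same: numbers in, weighted sums, probabilities out.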

Once you’ve built a model, you feed it a bunch of training data so that it can learn the coefficients and other number-stuff that goes inside the math-y network. You also provide it with an optimizer and loss function so that as the model is trained, it can know whether it’s getting better or worse at classifying data.
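The loss-and-optimizer relationship is easier for me to see at toy scale. This sketch learns a single coefficient `w` so that `w * x` matches `y`, using mean squared error as the loss and plain gradient descent as the optimizer. It is an assumption-laden illustration in plain TypeScript, not what the tutorial does: the real thing uses a different loss, a different optimizer, and many more coefficients.

```typescript
type Example = { x: number; y: number };

// Loss function: mean squared error of the predictions w * x against the labels y.
function meanSquaredError(w: number, data: Example[]): number {
  const total = data.reduce((sum, d) => sum + (w * d.x - d.y) ** 2, 0);
  return total / data.length;
}

// Optimizer step: move w a little in the direction that lowers the loss.
function gradientStep(w: number, data: Example[], learningRate: number): number {
  const gradient =
    data.reduce((sum, d) => sum + 2 * (w * d.x - d.y) * d.x, 0) / data.length;
  return w - learningRate * gradient;
}

// Training loop: record the loss before each step so progress is visible.
function train(
  data: Example[],
  steps: number,
  learningRate: number
): { w: number; losses: number[] } {
  let w = 0;
  const losses: number[] = [];
  for (let step = 0; step < steps; step++) {
    losses.push(meanSquaredError(w, data));
    w = gradientStep(w, data, learningRate);
  }
  return { w, losses };
}
```

Watching the recorded losses shrink is the "getting better or worse" signal: if they go up instead, the learning rate is probably too large.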

A really cool thing is you run this training process on your computer’s GPU. GPUs, like machine learning models, are big networks of fast math-y stuff. Beautiful symmetry! On the other hand, you usually can’t fit your training data set into GPU memory, so you end up batching your training data and submitting it to the GPU in loops.
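The batching loop looks roughly like this. It is a sketch in plain TypeScript: `toBatches` and `trainInBatches` are names I made up, and `trainOnBatch` is a hypothetical stand-in for whatever call hands one batch to the GPU.

```typescript
// Split a data set into fixed-size batches; the last batch may be smaller.
function toBatches<T>(items: T[], batchSize: number): T[][] {
  if (batchSize <= 0) {
    throw new Error("batchSize must be positive");
  }
  const result: T[][] = [];
  for (let start = 0; start < items.length; start += batchSize) {
    result.push(items.slice(start, start + batchSize));
  }
  return result;
}

// Hypothetical stand-in for one training step on the GPU.
// Here it only reports how many examples it was given.
function trainOnBatch(batch: number[]): number {
  return batch.length;
}

// Feed the whole data set through, one batch at a time.
// Returns the total number of examples processed.
function trainInBatches(examples: number[], batchSize: number): number {
  let processed = 0;
  for (const batch of toBatches(examples, batchSize)) {
    processed += trainOnBatch(batch);
  }
  return processed;
}
```

Only one batch needs to live in GPU memory at a time, which is the whole point of the loop.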

Once all this runs, you’ve got a trained model that can take image inputs (in this case, hand-written digits) and classify them as digits (0-9). Magic!