Scribbling through TensorFlow.js

I’ve been trying to wrap my head around machine learning lately. Today I worked through the TensorFlow.js tutorial on recognizing handwritten numbers with a neural network. Herein, my notes and scribbles.

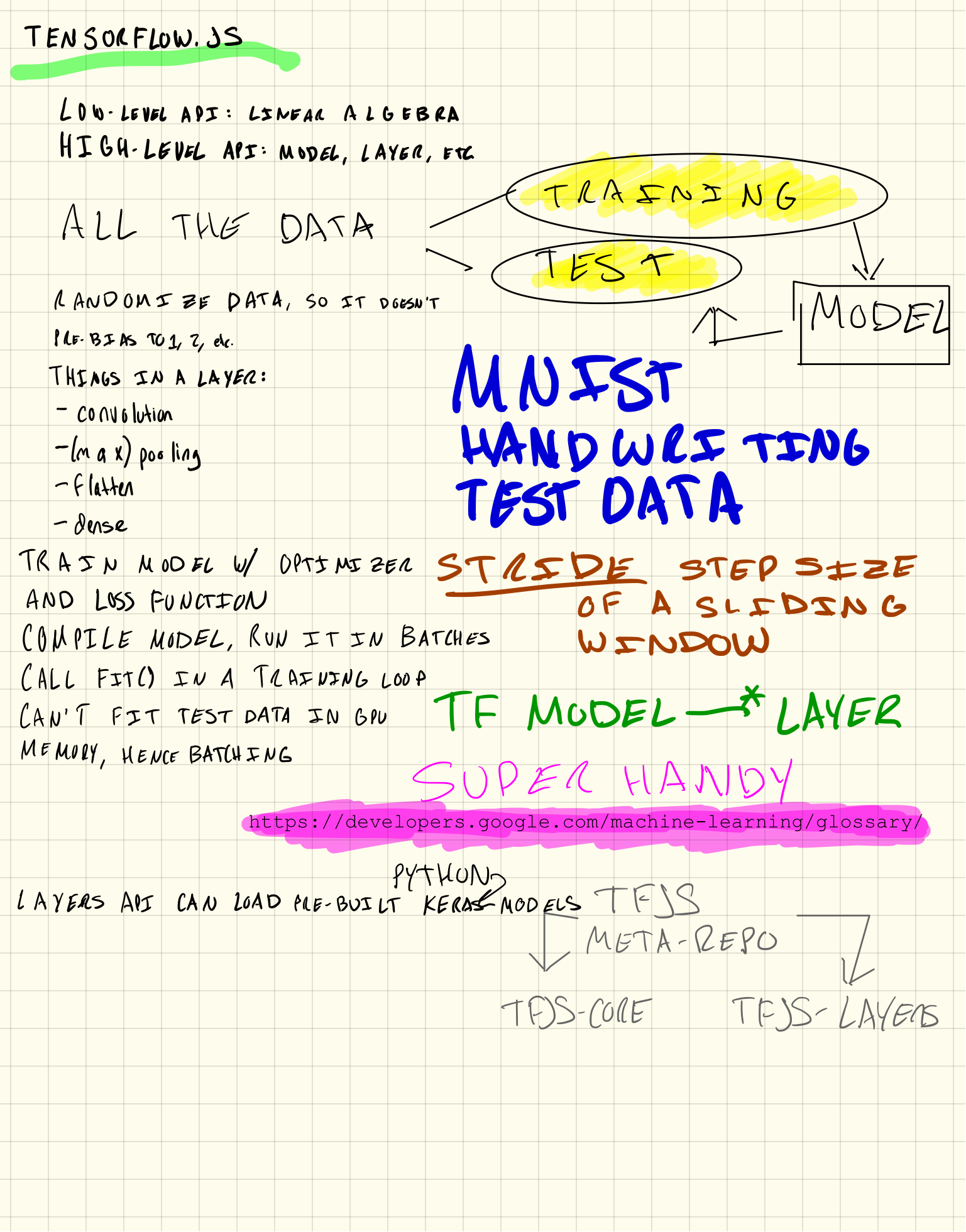

TensorFlow: it’s about turning linear algebra into models built of layers built of math

My previous forays into machine learning left me a little frustrated: I could tell there was a language here, with its own patterns and notation, but I couldn’t see it past the novelty of new-to-me words like sigmoids, convolutions, and hidden layers. Turns out those are part of the language.

But the really handy idioms are encoded in TensorFlow’s high-level model-and-layer API. A model encapsulates a chunk of machine learning that can be trained to classify inputs (images, texts, etc.) based on a mess of training data (pre-classified stuff). Every model is built from a network of layers; layers use linear algebra to transform numbers into classifications.
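To convince myself that a layer really is just linear algebra, here is a minimal sketch of one dense layer followed by a softmax, in plain JavaScript. This is not the TensorFlow.js API: `denseLayer` and `softmax` are hypothetical helper names, and the weights are made-up numbers rather than anything a model learned.

```javascript
// Plain-JavaScript sketch of a single dense layer plus softmax.
// denseLayer and softmax are hypothetical helpers, not TensorFlow.js calls.

// Multiply the input vector by a weight matrix (one row per output unit)
// and add one bias per output unit.
function denseLayer(input, weights, biases) {
  return weights.map((row, i) =>
    row.reduce((sum, w, j) => sum + w * input[j], biases[i])
  );
}

// Turn raw scores into probabilities that sum to 1.
function softmax(scores) {
  const max = Math.max(...scores); // subtract the max for numerical stability
  const exps = scores.map((s) => Math.exp(s - max));
  const total = exps.reduce((a, b) => a + b, 0);
  return exps.map((e) => e / total);
}

// A toy 3-pixel "image" classified into 2 classes with made-up weights.
const pixels = [0.0, 0.5, 1.0];
const weights = [
  [1.0, -1.0, 0.5],
  [-0.5, 2.0, 1.0],
];
const biases = [0.1, -0.1];

const probabilities = softmax(denseLayer(pixels, weights, biases));
console.log(probabilities); // two probabilities, the second one larger
```

A real model stacks several layers like this, and training is what replaces the made-up weights with useful ones.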

Once you’ve built a model, you feed it a bunch of training data so that it can learn the coefficients and other number-stuff that goes inside the math-y network. You also provide a loss function, which scores how wrong the model’s classifications are, and an optimizer, which uses that score to nudge the coefficients, so that as the model is trained it can know whether it’s getting better or worse at classifying data.
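Here is a small sketch of what "getting better or worse" can mean in numbers. I'm assuming a categorical cross-entropy loss, which is a common choice for classification; the notes above don't pin down which loss is used. `categoricalCrossEntropy` is a hypothetical helper name, written in plain JavaScript rather than with the library.

```javascript
// Plain-JavaScript sketch of a categorical cross-entropy loss.
// categoricalCrossEntropy is a hypothetical helper, not a TensorFlow.js call.

// label: one-hot array marking the true class.
// predicted: the model's probability for each class.
function categoricalCrossEntropy(label, predicted) {
  const epsilon = 1e-12; // keeps Math.log away from log(0)
  return -label.reduce(
    (sum, y, i) => sum + y * Math.log(predicted[i] + epsilon),
    0
  );
}

const label = [0, 0, 1]; // the true class is index 2
const confidentAndRight = [0.05, 0.05, 0.9];
const unsure = [0.3, 0.3, 0.4];

const lossRight = categoricalCrossEntropy(label, confidentAndRight);
const lossUnsure = categoricalCrossEntropy(label, unsure);

// A lower loss means a better prediction.
console.log(lossRight < lossUnsure); // true
```

The optimizer's whole job is to adjust the coefficients so that this number goes down over the training data.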

A really cool thing is that you can run this training process on your computer’s GPU. GPUs, like machine learning models, are big networks of fast math-y stuff. Beautiful symmetry! On the other hand, you usually can’t fit your whole training data set into GPU memory, so you end up batching your training data and submitting it to the GPU in loops.
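The batching loop is simple enough to sketch in plain JavaScript. The `batches` generator below is a hypothetical helper, and the strings stand in for real images; nothing here touches the GPU, it only shows how a data set gets sliced into chunks.

```javascript
// Plain-JavaScript sketch of splitting a data set into batches.
// batches is a hypothetical helper, not a TensorFlow.js call.

// Yield successive slices of at most batchSize examples.
function* batches(examples, batchSize) {
  for (let start = 0; start < examples.length; start += batchSize) {
    yield examples.slice(start, start + batchSize);
  }
}

// Ten stand-in examples, split into batches of four.
const trainingExamples = Array.from({ length: 10 }, (_, i) => `example-${i}`);
const batchSizes = [];

for (const batch of batches(trainingExamples, 4)) {
  // A real training loop would hand this batch to the GPU here.
  batchSizes.push(batch.length);
}

console.log(batchSizes); // [ 4, 4, 2 ]
```

The last batch comes up short whenever the data set size isn't a multiple of the batch size, which is worth remembering when the numbers don't divide evenly.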

Once all this runs, you’ve got a trained model that can take image inputs (in this case, handwritten digits) and classify them as digits (0-9). Magic!
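The last step, going from the model's output to an actual digit, can be sketched like this. I'm assuming the model emits ten probabilities, one per digit, which is the usual setup for this kind of classifier; `predictedDigit` is a hypothetical helper and the output array is invented for illustration.

```javascript
// Plain-JavaScript sketch of reading a digit off a model's output.
// predictedDigit is a hypothetical helper, not a TensorFlow.js call.

// Return the index of the largest probability, which is the predicted digit.
function predictedDigit(probabilities) {
  return probabilities.indexOf(Math.max(...probabilities));
}

// Invented output for one image: index 3 has the highest probability.
const output = [0.01, 0.02, 0.03, 0.8, 0.02, 0.02, 0.03, 0.03, 0.02, 0.02];

console.log(predictedDigit(output)); // 3
```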