Execution and idea in Frontierland

It’s commonly held, and pretty much true, that ideas are shallow and execution is depth. That is, the former is nothing without lots and lots of the latter.

Let’s set aside how “execution over ideas” is used as a bludgeon for a moment. I think there’s possibly a case where “execution AND idea” is a viable recipe for success.

If you’re in a Wild West, converting the minimal version of the idea to an executed offering can be all you need to succeed. Temporarily.

If you’re always moving from emerging market to emerging market, you’re betting not on your ability to execute, but on your ability to identify the next market. You’re OK with fast followers building on your idea, iterating on it, and establishing themselves as the market matures. That’s fine, because you’ve already moved on to the next market.

So the risk here isn’t execution, but idea/market selection. When you’re first, it’s somewhat OK to ship a product that will look laughable once the market matures. It’s 75% idea, 25% execution.

When you’re second, seventh, or seventeenth, you had better execute on the idea, the business, and the culture you build. It’s 95% execution, 5% idea.

Empathy Required

Nearly fourteen years ago, I graduated college and found my first full-time, non-apprentice-y job writing code. When I wrote code, these were the sorts of things I worried about:

- Where is the code I should change?

- Is this the right change?

- What are the database tables I need to manipulate?

- Who should I talk to before I put this code in production?

Today, I know a lot more things. I did some things right and a lot of things wrong. Now when I write code, these are the sorts of things I worry about:

- Am I backing myself into a corner by writing this?

- Why was the code I'm looking at written this way and what strategy should I use to change it?

- Will this code I just wrote be easy to understand and modify the next time I see it? When a teammate sees it?

- Should I try to improve this code's design or performance more, or ship it?

Half of those concerns are about empathy. They’re only a sampling of all the things I’ve learned I should care about as I write code, but I think the ratio holds up. As I get better and better at programming, as my career proceeds, I need more empathy towards my future self and my teammates.

Further, that empathy needs to extend towards those who are less experienced or haven’t learned the precise things I’ve learned. What works for me, the solutions that are obvious to me, the problems to steer clear of, none of that is in someone else’s head. I can’t give them a book, wait three weeks, and expect them to share my strengths and wisdoms.

That means, when I advise those who listen or steer a team that allows me to steer it, I have to make two camps happy. On one hand, I have to make a decision that is true to what I think is important and prudent. On the other hand, I have to lay out guidelines that lead the listener or teammate towards what I think is important or prudent without micromanagement, strict rules, and other forms of negative reinforcement.

It’s so easy, for me, to just hope that everyone is like me and work under that assumption. But it’s much better, and highly worthwhile, to figure out how to help friends and teammates to level up on their own. It requires a whole lot of empathy, and the discipline to use it instead of impatience. Worth it.

Copypasta, you're the worst pasta

Copypasta. It’s the worst. “I need something like this code here, I’ll just drop it over there where I need it. Maybe change a few things.” Only you can prevent headdesks!

It’s not really possible, in my experience, to make it easier to use code through methods and functions than to just copy what you need and start changing it. No amount of encapsulation or patterns is easier than a pasteboard.

Perhaps copypasta’s natural predator is a well-informed code review. There are tools, like flay, that can detect some kinds of code duplication.
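For a feel of what such a tool is hunting for, here is a deliberately naive Ruby sketch. It is not how flay works (flay compares the structure of parsed code, so copies with renamed variables can still match); it only flags identical runs of whitespace-normalized lines. The find_duplicates name, the window size, and the sample files are illustrative assumptions.

```ruby
# A naive duplication finder (NOT flay's algorithm): it flags identical
# runs of `window` consecutive lines after normalizing whitespace.
# Returns a hash of duplicated chunk => [[source_name, starting_line], ...].
def find_duplicates(sources, window: 3)
  seen = Hash.new { |hash, key| hash[key] = [] }

  sources.each do |name, text|
    # Strip indentation and collapse repeated spaces so re-indented copies match.
    lines = text.lines.map { |line| line.strip.squeeze(" ") }

    lines.each_cons(window).with_index do |chunk, index|
      next if chunk.any?(&:empty?) # ignore chunks that include blank lines
      seen[chunk.join("\n")] << [name, index + 1]
    end
  end

  # Keep only chunks that show up in more than one place.
  seen.select { |_chunk, locations| locations.size > 1 }
end

# Example: two hypothetical files that share a copy-pasted loop.
sources = {
  "a.rb" => "def total(items)\n  sum = 0\n  items.each { |i| sum += i.price }\n  sum\nend\n",
  "b.rb" => "def tax(items)\n  sum = 0\n  items.each { |i| sum += i.price }\n  sum * 0.08\nend\n"
}

find_duplicates(sources, window: 2).each do |chunk, locations|
  puts "Duplicated in #{locations.map { |name, line| "#{name}:#{line}" }.join(', ')}:"
  puts chunk
end
```

A line-based check like this misses the moment someone renames a variable in the pasted copy, which is exactly why structural tools exist, and why a human reviewer still matters.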

But for the most part, it’s a battle of diligence.

(Ed. I found this in my draft folder from four years ago. Copypasta; copypasta never changes.)

Through mocks and back

A problem with double/stub/mock libraries is that they don’t often fail outright. They don’t snap like a pencil when they’re used improperly. Instead, when you use them unwisely, they lie in wait. At an inopportune time, they leap out.

Change an internal API method name or argument list and your poorly conceived doubles will moan. Rearrange the relationship between classes, and your overly-specific stubs won’t work anymore.

At some point, I felt pretty handy with mocks. Then I wrote a bunch of brittle mocks and decided I needed to go back to square one. I’m through the “just avoid mocks” phase, and now I use them sparingly.

Favor a better API in the code under test, then hand-coded fakes, then stubbed out methods, before finally falling back to a mock. Someone, possibly yourself, will thank you later.
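Here is a minimal Ruby sketch of the first two rungs of that ladder, using hypothetical Checkout and FakeGateway classes. The code under test takes its collaborator as an argument (the better API), and the test hands it a small hand-coded fake instead of a mock with per-method expectations.

```ruby
# Hypothetical code under test. Accepting the gateway as an argument is the
# "better API" step: tests can pass any object that responds to #charge.
class Checkout
  def initialize(gateway)
    @gateway = gateway
  end

  def purchase(amount_cents)
    result = @gateway.charge(amount_cents)
    result[:ok] ? :paid : :declined
  end
end

# A hand-coded fake: a tiny, working implementation of the same interface.
# It records what it was asked to do so tests can inspect it afterwards.
class FakeGateway
  attr_reader :charges

  def initialize(decline_over: Float::INFINITY)
    @decline_over = decline_over
    @charges = []
  end

  def charge(amount_cents)
    @charges << amount_cents
    { ok: amount_cents <= @decline_over }
  end
end

gateway = FakeGateway.new(decline_over: 10_000)
checkout = Checkout.new(gateway)
puts checkout.purchase(500)
puts checkout.purchase(50_000)
```

Because the fake is one ordinary class, renaming charge or changing its arguments breaks in one obvious place instead of lying in wait across scattered expectations.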

Stevie Wonder, for our times of need

Tim Carmody writing for Kottke.org, Stevie Wonder and the radical politics of love:

Songs in the Key of Life tries to reconcile the reality of the post-Nixon era — the pain that even though the enemy is gone, the work is not done and the world has not been transformed — with an inclusive hope that it one day will be, and that faith, hope, and love are still possible.

It’s what makes the album such a magnificent achievement. But I’m not there. I don’t know when I will be. So for now I’m keeping Songs In the Key of Life on the shelf. An unopened bottle of champagne for a day I may never see. But I’d like to.

On three of Stevie Wonder's best albums, his political writing, and how he bridges saying something and making a good song.

I cannot wait to listen to Songs in the Key of Life again.

How Disney pulls me in

Dave Rupert, Disneyland and the Character Machine:

In October my family took a trip to Disneyland. I couldn’t help but be infected by the magic of Disneyland that allows you to feel young at heart and compels you to wear mouse ears on your head. Walking mile after mile through the park it’s very clear this magic has been painstakingly created and preserved by paying the utmost attention to detail.

There’s magic in paying attention to detail; it’s the sleight of hand that helps seamlessly preserve the illusion. At Disney, not only is the illusion preserved, you’re encouraged to take part through cosplay and interacting with your favorite characters. This makes you not just an observer, but also a participant in the ritual.

The level of detail is amazing, completely intentional, and interconnected. You can’t see Tomorrowland from Fantasyland because that would take you out of the moment. You can ask Anna from Frozen about her favorite chocolate because she loves chocolate in the movie.

More than a theme park, Disneyland and Disney World are movie-like experiences you can walk around in, immersed in the joy and excitement of the setting and story.

Does an unadvertised extension point even exist?

There was an extension point, but I missed it.

I was adding functionality to a class. I needed to add something that seemed a little different, but not too far afield, from what the existing code was doing. So I came up with a good name, wrote a method, and went about my day.

A few weeks later, trying to understand an obscure path through this particular class, I found the extension point I should have been using. On one hand, eureka! On the other hand, why didn’t I notice this in the first place?

Did I not consider the open/closed principle enough? Perhaps my “modification” sense should have tingled, sending me to create a new object to encapsulate the behavior with.

Was the extension point hidden by indirection? Perhaps the change going into an ActiveRecord model threw me off; I was doing what you’d normally do in a model. I wasn’t expecting another layer of abstraction.

Were my changes too scattered amongst many files? I had modifications in a half-dozen files, plus their tests. It’s possible I was juggling too many things.

Probably it’s all of these things. Lesson learned: when I get to feeling clever and add a handy extension point to make the next person’s job easier, advertise that extension point and make it clear this is probably where they want to make their change.
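A minimal Ruby sketch of what that advertising might look like, using a hypothetical Notifier class: a comment that says "extend here", one well-named registration method, and an error message that points the next person back to it.

```ruby
# Hypothetical class with an advertised extension point.
class Notifier
  # EXTENSION POINT: to support a new delivery channel, register a handler
  # here instead of adding another branch to #deliver. A handler is any
  # object that responds to #call(message).
  def self.register_channel(name, handler)
    channels[name] = handler
  end

  # Class-level registry of channel name => handler.
  def self.channels
    @channels ||= {}
  end

  def deliver(channel, message)
    handler = self.class.channels.fetch(channel) do
      # Unknown channels fail loudly and name the extension point.
      raise ArgumentError, "unknown channel #{channel.inspect}; see Notifier.register_channel"
    end
    handler.call(message)
  end
end

# Registering channels is the only change a new feature needs.
Notifier.register_channel(:log, ->(message) { "LOG: #{message}" })
Notifier.register_channel(:shout, ->(message) { message.upcase })

puts Notifier.new.deliver(:log, "hello")
```

The design choice here is that the path of least resistance and the intended path are the same one: the error a newcomer hits first tells them where the change belongs.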

Perhaps there’s a benign explanation for Paul Ryan appearing to have cut off his phones. Anecdotally, it does not seem GOP Congresscritters are putting much effort into their voicemails or phone lines. I called my representative, Lamar Smith, yesterday afternoon, incensed that he had suggested we listen to Trump and not the media. Both his DC and Austin voicemails were full. I was able to get through this morning and spoke with a staffer who was dismissive but polite.

This practice of neglecting voicemails and only dismissively answering phones during office hours is appalling. The job of our Congress is to represent us. They cannot do that job if they aren’t taking every voicemail, phone call, and email into account. Rarely do I get the feeling Congress even wants to appear to be doing its job.

If I didn’t answer my work email, I’d lose my job. But Congresspeople don’t lose their jobs except in an election or through certain kinds of partisan maneuvering.

Fire Congress anyway. I’ve started with the phones and a whole lot of pent-up frustration. Maybe the infernal hell of fax machines is next?

The TTY demystified. Learn you an arcane computing history, terminals, shells, UNIX, and even more arcanery! Terminal emulators are about the most reliable, versatile tools in my not-so-modern computing toolkit. It’s nice to know a little more about how they work, besides “lots of magic ending in -TY”, e.g. teletypes, pseudo-terminals, session groups, etc.

Clinton Dreisbach: A favorite development tool: direnv. I’ve previously used direnv to manage per-project environment variables. It’s easy to set up and use for this. I highly recommend it! But I’d never thought of using it to define per-project shell aliases as Clinton does. Smart!

Our laws are here, they just aren't equally practiced yet

That thing where institutions like the FBI are prohibited by law from meddling with presidential elections, and then the FBI meddled multiple times. We’re just going to let that slide? Seems like it!

The point is not that there was one big injustice, though there was. The point is that justice has been distributed unevenly through our history. Outcomes favored by those in power but obtained illegally have long been effectively legal. Those out of power have always felt the full brunt of the law, and worse.

The inequality and imperfection by which our law has always been practiced. That’s the lesson.

Journalism for people, not power

Journalism is trying very hard to do better, but still failing America. Media is covering politics and not “We, the people”.

Take the coverage of the Republican attempt to neuter congressional oversight and subsequent retreat amidst tremendous scrutiny. Coverage typically read “Donald Trump tweeted about this and by the way a ton of people called their congressperson.” The coverage is focused on what a person in power says. A fascination with celebrity and power.

It’s not focused on the readers, or the people who bear the actions of politicians. Certainly not the disadvantaged who can’t even keep up with politics because they have neither a) the money for a newspaper subscription or b) the time to follow it all between multiple jobs and possibly a family.

It’s focused on what politicians are saying the people want. It’s easy to get a politician to talk about this. That’s part of their job now, skating the public discourse towards the laws they want to pass.

It’s focused on what think tanks want to talk about. Those talking heads on TV and think pieces on the opinion pages? It’s easy to get those people to talk because they’re paid to do those things by giant lobbies and interest groups. They’re paid to get in front of people and tell them what laws they should want.

Journalism should counteract these extensions of the corporate state. When a politician, funded by a lobby, says “the people want affordable health care”, a print or television journalist should say “and here’s what three people not involved in politics actually said”. Maybe they’ll agree with their politicians, maybe they won’t!

When a politician says “we should lower taxes on the top tax bracket”, media should follow up with what that means. Who exactly gets that tax break? Will it actually benefit other people? What do people who don’t benefit from that tax break gain or lose because of it?

The next news cycle will come, and the politicians will say one thing. The truth and the tradeoffs will say another. The journalists will go out there and talk about the tradeoffs and what people think now. And then maybe we’ll get a more educated society.

Likely this means the sports and entertainment pages have to subsidize the political coverage. Or we need to start recognizing pieces stuffed with quotes from think tanks and politicians as “advertorial” and not news.

Regardless, political journalism as zero-sum entertainment has to go.

I love overproduced music

It seems like some folks don’t like music with a lot of studio work. Overproduced, they call it. Maybe this is a relic of the days when producers weren’t a creative force on par with the actual performers and artists.

I don’t know, because I love overproduced music. Phil Spector, “Wall of Sound”? Bring it. Large band efforts like “Sir Duke”, “You’re not from Texas”, or “Good Vibrations”? Love it. Super-filtered drum sound? Gotta have it.

It probably has everything to do with, at one point, wanting to pursue a career as a double bassist in symphony orchestras. The pieces I loved the most were the big Romantic tone poems and symphonies with a chorus. Hundreds of people, dozens of unique parts, all playing at the same time, often loudly. It’s the essence of overproduced.

Here’s a curious thing. When I hear “Wouldn’t it be nice?” in my head, it’s much bigger and Wagnerian than it is on Pet Sounds. The pedal tone is bigger and more prominent, the first note after the guitar intro is massive. Maybe I’m just projecting my interpretation onto the song.

Contrast to “Good Vibrations”. There’s always more going on than I remember. Vocal parts, instruments. Sooooo good.

Outside of Brian Wilson, I’ve noticed Jeff Lynne is amongst “the overproducers”. Especially, apparently, how he thins out drum sounds. Love it. Have I ever told you how much I dislike the sound of a raw snare drum?

Turns out Ruby is great for scripting!

Earlier last year, I gave myself two challenges:

- write automation scripts in Ruby (instead of giving up on writing them in shell)

- use system debugging tools (strace, lsof, gdb, etc.) more often to figure out why programs are behaving some way

Of course, I was almost immediately stymied on the second one:

[code lang=text]
sudo dtruss -t write ruby -e "puts 'hi!'"
Password:

dtrace: failed to execute ruby: dtrace cannot control executables signed with restricted entitlements
[/code]

dtruss is the dtrace-powered macOS equivalent of strace. It is very cool when it works. But it turns out Apple has a thing, System Integrity Protection, that protects users from code injection hijinks and also keeps dtrace from working. You can turn it off, but that requires hijinks of its own.

I did end up troubleshooting some production problems via strace and lsof. That was fun, very educational, and slightly helpful. Would do again.

I did not end up using gdb to poke inside any Ruby programs. On the whole, this is probably for the better.

I was more successful in using Ruby as a (gasp!) scripting language. I gave myself some principles for writing Ruby automation:

- only use core/standard library; no gem requires, no bundles, etc.

- thus, shell out to programs likely to be available, e.g. curl

- if a script starts to get involved, add subcommands

- don’t worry about Ruby’s (weird-to-me) flags for emulating sed and awk; stick to the IRB-friendly stuff I’m used to
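A minimal sketch of the "shell out" principle using only Ruby's standard library (Open3, which ships with Ruby). The run! helper name is my own invention for illustration, and the curl call in the comment uses a placeholder URL.

```ruby
require "open3"

# Run an external program and return its standard output, raising if it
# exits unsuccessfully. Passing the command as separate arguments (rather
# than one string) means no shell is involved, so there's no quoting to get wrong.
def run!(*command)
  stdout, stderr, status = Open3.capture3(*command)
  unless status.success?
    raise "#{command.join(' ')} failed (exit #{status.exitstatus}): #{stderr.strip}"
  end
  stdout
end

# In a script, for example (placeholder URL):
#   html = run!("curl", "--silent", "--fail", "https://example.com/")
puts run!("echo", "hello from a subprocess")
```

If the program isn't installed at all, Open3.capture3 raises Errno::ENOENT rather than returning a failed status, which is a reasonable way for a script to fail fast.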

These were good principles.

At first I tried writing Ruby scripts as command suites via sub. sub is a really cool idea, very easy to start with, makes discovery of functionality easy for others, and Just Works. You should try it some time!

That said, often I didn’t need anything fancy. Just run a few commands. Sometimes I even wrote those with bash!

But if I needed to do something less straightforward, I used a template like this:

[code lang=text]
#!/usr/bin/env ruby

HELP = <<-HELP
test            # Run the test suite
test ci         # Run test with CI options enabled
test acceptance # Run acceptance tests (grab a coffee….)
HELP

module Test
  module_function

  def test
    # Shell out to RSpec; system streams its output to the terminal
    system("rspec spec")
  end

  # … more methods for each subcommand
end

if __FILE__ == $0
  cmd = ARGV.first

  case cmd
  when "ci"
    Test.ci
  # … a block for each subcommand
  else
    Test.test
  end
end
[/code]

The template I settled on eliminated the friction of starting something new. I’d write down the subcommands or workflow I imagined I needed and get started. I wrote several scripts, deleted or consolidated a few of them after a while, and still use a few of them daily.

If you’re using a “scripting” language to build apps and have never tried using it to “script” things I “recommend” you try it!

Tinkers are a quantity game, not a quality game

I spend too much time fretting about what to build my side projects and tinkers with. On the one hand, that’s because side projects and tinkers are precisely for playing with things I normally wouldn’t get a chance to use. On the other hand, it’s often dumb because the tinker isn’t about learning a new technology or language.

It’s about learning. And making stuff. Obsessing over the qualities of the build materials is beside the point. It’s not a Quality game, it’s a Quantity game.

Now if you’ll excuse me I need to officiate a nerd horserace between Rust, Elm, and Elixir.

Jobs, not adventures

Earlier this year, after working at LivingSocial for four years, I switched things up and started at ShippingEasy. I didn’t make much of it at the time. I feel like too much is made of it these days.

These are jobs, not adventures.

It has, thankfully, become cliché to get excited about the next adventure. Instead, I’m going to flip the script and tell you about my LivingSocial “adventure”.

- Once upon a time I joined a team with all the promise in the world

- And as a sharp person I’d meet there told me, the grass is always greenish-brown, no matter how astroturf-green it seems from the outset

- I wrestled a monolith (two, depending on how you count)

- I joined a team, attempted to reimplement Heroku, and fell quite a bit short

- I wandered a bit, fighting little skirmishes with the monolith and pulling services out of it

- I ended up in light management, helping the people taking the monolith head on

- I gradually wandered up to an architectural tower, but tried my best not to line it in ivory

- I had good days where stuff got done in the tower

- And I had days where I feng shui’d the tower without really moving the ball forward

- In April, it was time for me to hand the keys to the tower over to other sharp folks and spread what I’ve learned elsewhere

- In the end, I worked with a lot of smart and wonderful people at LivingSocial.

Sadly, there was no fairy tale ending. About a third of the people I worked with ended up leaving before I did. Another third were laid off in the nth round of layoffs just after I left. The other third made it all the way through to Groupon’s acquisition of LivingSocial.

It was not a happy ending or a classic adventure. It was an interesting, quirky tale.

Fascinating mechanical stories

I already wrote about cars as appliances or objects, but I found this earlier germ of the idea in my drafts:

There’s an in-betweenish bracket where prestige, social signaling, or bells and whistles count a bit more. The Prius and Tesla are social signals. Some folks get a Lexus, Acura, Infiniti, BMW, Mercedes-Benz, etc. for the prestige more than the bells and whistles.

The weird thing about e.g. BMW, Porsche, or Ferrari is how much enthusiasts know about them: the history, the construction, the internal model numbers, the stories. I suspect you can tell a prestige BMW owner from an enthusiast BMW owner by whether they can tell you the internal model number of their car.

My first thought, when I came across this, was that it’s a pretty good bit of projection and rationalization on my part ;) But it’s not hard to look into the fandom of any of those ostensibly-prestige brands like BMW or Porsche and find communities that refer to BMWs not as 3- or 5-series but as E90s or E34s (mine is an F30) and to Porsches as 986s or 996s instead of by their Boxster or 911 marketing names. So I’m at least a little right about this!

I will never experience driving the majority of cars out there. I may never know how an old BMW compares to a newer one or properly hear an old Ferrari V-12. I can partake of the enthusiasm about their history, engineering, and idiosyncrasies. That’s the big attraction for me: the stories.

The lesser known vapors and waves

There’s a thing going on in music with all the vapors and chills and waves. I’m not entirely sure what it is, yet. Even after reading this excellent survey of the various vaporwave subgenres, I’m still not sure what it is. But it’s very synth-y, a little sample-y, and very much what you’d expect to hear in a hip, contemporary hotel lobby.

Connective blogging tissue, then and now

I miss the blogging scene circa 2001-2006. This was an era of near-peak enthusiasm for me. One of those moments where a random rock was turned over and what lay underneath was fascinating, positive, energizing, captivating, and led me to a better place in my life and career.

As many prominent bloggers have noted, those days are gone. Blogs are not quite what they used to be. People, lots of them!, do social media differently now.

Around 2004, amidst the decline of peer-to-peer technologies, I had a hunch that decentralized technology was going to lose out to centralization. Lo and behold, Friendster then MySpace then Facebook then Twitter made this real. People, I think, will always look to a Big Name first and look to run their own infrastructure nearly last.

In light of that, I still think the lost infrastructure of social media is worth considering. As we stare down the barrel of a US administration that is likely far less benevolent with its use of an enormous propaganda and surveillance mechanism, should we swim upstream of the ease of centralization and decentralize again?

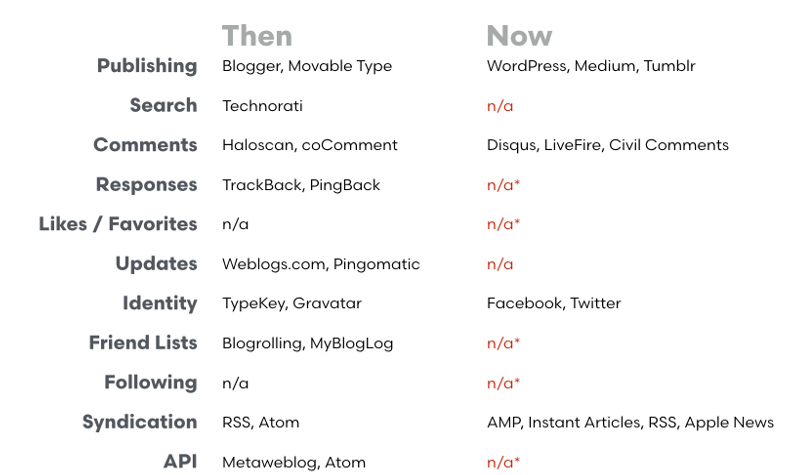

Consider this chart identifying community and commercially run infrastructure that used to exist and what has, in some cases, succeeded it:

[caption id=“attachment_3832” align=“alignnone” width=“796”] Connective tissue, then and now[/caption]

Connective tissue, then and now[/caption]

I look over that chart and think, yeah a lot of this would be cool to build again.

Would people gravitate towards it? Maybe.

Could it help pop filter bubbles, social sorting, fake news and trust relationships? Doesn’t seem worth doing if it can’t.

Do people want to run their identity separate from the Facebook/Twitter/LinkedIn behemoth? I suspect what we saw as a blog back then is now a “pro-sumer” application, a low cost way for writers, analysts, and creatives to establish themselves.

Maybe Twitter and Facebook are the perfect footprint for someone who just wants to blow off some steam about their boss, politics, or a fellow parent? It’s OK if people want to express their personality and opinions in someone else’s walled garden. I think what we learned in 2016, though, is that the problems with walled gardens go beyond mere commercialism.

That seems pessimistic. And maybe missing the point. You can’t bring back the 2003-6 heyday of blogging a decade later. You have to make something else. It has to fit the contemporary needs and move us forward. It has to again capture the qualities of fascinating, positive, energizing, captivating, and leading to a better place.

I hope we figure it out and have another great idea party.

On recent Mercedes-Benz dashboard designs

Mercedes (is it ok if I call you MB?), I think we need to talk. You’re doing great in Formula 1, congratulations on that! That said, you’ve gone in a weird direction with your passenger car dashboards. I suspect there are five different teams competing to win with these dashboards and I don’t think anyone, especially this car enthusiast, is winning overall.

Here’s your current entry-level SUV, the GLA. If my eye is correct, this is one of your more dated dash designs:

[Image: All the buttons!]

Back in the day, I think, you had someone on staff at MB whose primary job was to make sure your dashboards had at least 25 buttons on them. This was probably a challenging job before the advent of the in-car cellular phone. However, once those became common, that was 12 easy buttons if you just throw a dial pad onto the dash. And you did!

So it’s easy to identify this as an older design from the dozens (43) of buttons. But the age of this design also shows from the LCD. One, it’s somewhat small. Two, and more glaringly, you simply tacked the LCD onto the dashboard. What happened here? Did you run out of time?

I think you can do better. The eyeball vents are nice though!

Now let’s look at a slightly more modern, and much further upscale, design. Your AMG GT coupe:

[Image: All the suede]

OK so you lost most of the buttons in favor of bigger, chunkier buttons. That’s good! You also made a little scoop in the dash for the LCD. That’s progress, but the placement still feels awkward. I know that’s where all your luxury car friends put the LCD now, but you’re so dominant in F1, maybe you can do better here too?

Can we take a moment to talk about the interactions a little? Your take on the rotary control is a little weird. You’ve got one, and it’s got a little hand rest on top of it. That seems good. But then the hand rest is also a touch interface for scribbling letters? Seems weird! I’ve never used that, but I’m a little skeptical.

Next, you’ve gone through some weird stuff with your shifters. You had a really lovely gated shifter on the S-class coupe as long ago as the early ’90s! Lately you’ve tried steering column shifters, and now it seems you’ve settled on a soap-shaped chunk of metal that you move up and down to change directions and put it in park. I feel like you should give up the physical shifter thing and just go with (you’re gonna like this) more buttons.

My parting thought on the GT’s interior is this: width. Your designs impart a sense of tremendous girth in the dash, making the car feel bigger. We’ll come back to that immediately…

Finally, one of your most recent designs, the E-class sedan:

[Image: All the pixels!]

Again with a regal sense of width. Personally, I don’t like it. It makes your car seem like a giant sofa.

You are making great progress on reducing the number of buttons. Again, kudos.

OK, clearly you got a great deal on LCD panels. Plus, an almost equally good deal on eyeball vents. Good for you!

Also you put an analog clock on the dash. So that’s nice.

I don’t think we’re going to like this giant piece of software and glass thing for very long. Did you see Her? There are hardly any displays in it. All the computers are somehow inhabited by the characters, either by talking to them or interacting within a projection. Why not take one of your classic instrument clusters, make that an LCD, and occasionally project information onto the windshield if the driver or passenger needs to see it there? Just a thought!

I’m a little split on the design of your wheel there. It’s nice that technically it’s a 3-spoke design but really, if you count, it’s 4 spokes. The lamest number of spokes. Perhaps with the split you were trying to add more negative space and perhaps evoke a very old, SL-like 2-spoke design? That’s a nice gesture, but I think you missed here. Surely you could engineer a straight-up 2-spoke wheel?

In summary:

- fewer buttons, less noticeable screens, more seamless interactions

- a few retro design elements (eyeball vents, analog clocks) are great, too many is too much

- reduce your five design teams (screens, buttons, wheels, interactions, A/C) down to two: driving interactions and auxiliary interactions

Hope that helps!