2023

Build for the excitement of building

Nicole Tietz-Sokolskaya, Write more “useless” software:

When you spend all day working on useful things, doing the work, it’s easy for that spark of joy to go out. … Everything you do is coupled with obligations and is associated with work itself.

You lose the aspect of play that is so important.

Writing useless software is a great way to free yourself from those obligations. If you write something just to play, you define what it is you want out of the project. You can stop any time, and do no more or less than you’re interested in. Don’t want to write tests? Skip them. Don’t want to use an issue tracker? Ditch it. Finished learning what you wanted to? Stop the project if it’s not fun anymore!

Build for self-learning, to tell a joke, to get your mind off something, as methodical practice, or so you can write about it.

You don’t always have to build for lofty open-source principles, entrepreneurial hustle, or because someone told you to!

Related: Whyday is August 19, plenty of time to plan your next frivolous hack.

The pace you’re reading is the right pace for you to read

Ted Gioia, My Lifetime Reading Plan:

IT’S OKAY TO READ SLOWLY

I tell myself that, because I am not a fast reader.

By his own account, Gioia is a prolific and thorough reader, and yet a self-proclaimed non-speed-reader.

You don’t have to read super-fast if you’re always reading whatever is right for you at the moment. Doubly so if you’re deeply/actively reading, searching for understanding or assimilating ideas into your own mental arena.

Once more for the slow readers, like myself, in the back: it’s fine, just keep reading!

Inside you, there are two or more brains

David Hoang, Galaxy Brain, Gravity Brain, and Ecosystem Brain:

The Galaxy Brain thinkers are in 3023 while we're in 2023. They relentlessly pursue the questions, “What if?” Imagination and wonder fuel the possibilities of what the world could be.

…

I believe every strong team requires skeptics and realists. Introducing, the Gravity Brain. Don't get it twisted in thinking this is negative—it's a huge positive. If you say "jump," to a Gravity Brain person, they won't say, "how high?" Instead, they say, "Why are we jumping? Have we considered climbing a ladder? Based on the average vertical jump of humans on Earth, this isn't worth our time." Ambition and vision don’t matter if you don’t make progress towards them.

…

Ecosystem Brains think a lot of forces of nature and behaviors. They are usually architects and world builders. When they join a new company, they do an archeological dig to understand the history of society, language, and other rituals.

I’m a natural gravity brain and occasional ecosystem brain. I aspire to galaxy brain, but often get there by proposing a joke/bad idea to clear my mind and get to the good ideas.

Which one are you?

Aristotle’s ethical means of virtue and vice but for creative work:

- Winning is the mean between moving the goal-lines to finish and not finishing due to non-constructive goal-lines

- Quality is the mean between piles of incomplete junk and one or two overwrought ideas

- Taste is the mean between copying and invention in a vacuum of influences

- Flow is the mean between distraction and idleness

- Iteration is the mean between doing it once because you nailed it and doing it once because you gave up

I’m not your cool uncle

I find that playing the “I’m the leader, this is the decision, go forth and do it” card is not fun and almost always fraught with unintended consequences.

A notable exception is teams that fall back to working in their own specialized lanes and throwing things over the wall. Really, the issue is throwing things over the wall; working in your lane isn’t inherently harmful. Playing the leader card and insisting folks work across specialization in their lane or closely with a teammate whose skills complement their own has fixed more things than it complicated. More specifically, the complications were the kind of difficulties a high-functioning team would face anyway, i.e., “high-quality problems”.

Now I wonder what other scenarios justify playing the power dynamic or “I’m not your cool uncle” card to escape an unproductive local maximum.

The Textual framework

Textual is a Rapid Application Development framework for Python, built by Textualize.io.

Build sophisticated user interfaces with a simple Python API. Run your apps in the terminal and (coming soon) a web browser.

Works across operating systems and platforms: terminal, GUI, and web (apparently). Uses CSS for styling internally. A wild implementation choice, but probably practical for adoption!

May many more, modern/practical Visual Basic 6/Delphi-like tools bloom. 🤞

Stop writing

George Saunders on getting past self-critical/low-energy writing spirals (aka one of the many forms of writer’s block):

Another thing I sometimes suggest is this: stop writing. That is, stop doing your “real” writing. Yank yourself out of your usual daily routine. Instead, today, off the top of your head, write one sentence. Don’t think about what it should be about, or any of that. Just slap some crazy stuff down on the page. (Or it can be sane stuff. The “slapping down” is the key.) Print it out. Then, go do something else and, over the next 24 hours, do your best not to give that sentence a single thought.

The complete method, in my own words:

- Start with a sentence, nothing in particular. Just start.

- Return to that sentence daily, making additions or changes that feel right.

- As the thing grows from a sentence to a paragraph and so on, spend more time with it. But don’t sweat the magnitude of the output. A trivial punctuation change is “a good day’s work”.

- Get bolder in the changes. Do what you prefer and don’t worry about why it is you gravitate towards that particular change.

- Resist the urge to switch from working on an exercise to working your routine/process. Keep growing it instinctively.

- Eventually you have a whole story/essay/whatever written this way and you’re happy to do something with it.

- Now you are un-stuck.

(Am I using this post to get past a lull in publishing? Yes.)

Build with language models via llm

llm (previously) is a tool Simon Willison is working on for interacting with large language models, running via API or locally.

I set out to use llm as the glue for prototyping tools to generate embeddings from one of my journals so that I could experiment with search and clustering on my writings. Approximately, what I’m building is an ETL workflow: extract/export data from my journals, transform/index as searchable vectors, load/query for “what docs are similar to or match this query”.

Extract and transform, approximately

Given a JSON export from DayOne, this turned out to be a matter of shell pipelines. After some iterations with prompting (via Raycast’s GPT3.5 integration), I came up with a simple script for extracting entries and loading them into a SQLite database containing embedding vectors:

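    #!/bin/sh
    # extract-entries.sh
    # Usage: ./extract-entries.sh Journals.json
    #
    # [1] The bare "-" argument reads the documents from stdin.
    # [2] A locally-run sentence-transformers model generates the embeddings.

    file=$1

    # Reshape each entry into an {id, content} object, then embed the
    # lot and store vectors plus original text (--store) in SQLite.
    jq '[.entries[] | {id: .uuid, content: .text}]' "$file" |
      llm embed-multi journals - \
        --format json \
        --model sentence-transformers/all-MiniLM-L6-v2 \
        --database journals.db \
        --store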

A couple things to note here:

- The placement of the - parameter matters here. I’m used to placing it at the end of the parameter list, but that didn’t work. The llm embed-multi docs suggest that --input is equivalent, but I think that’s a docs bug (the parameter doesn’t seem to exist in the released code).

- I’m using a locally-run model to generate the embeddings. This is very cool!

In particular, llm embed-multi takes JSON documents with id/content keys (here a single JSON array, per --format json) and “indexes” those into a database of document/embedding rows. (If you’re thinking “hey, it’s SQLite, that has full-text search, why not both?”: yes, me too, that’s what I’m hoping to accomplish next!)
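The reshaping step is small enough to sketch in Python for anyone who would rather not reach for jq. This is a rough equivalent of the jq filter, not a replacement for the script: it assumes the export has a top-level entries list whose items carry uuid and text keys (the same shape the filter relies on), and the function name, sample data, and the choice to skip text-less entries are all made up for illustration.

```python
import json


def entries_to_records(export: dict) -> list[dict]:
    """Keep only id/content for each journal entry, like the jq filter."""
    records = []
    for entry in export.get("entries", []):
        text = entry.get("text")
        # Assumption: entries without text (e.g. photo-only) are skipped.
        # The jq filter would instead emit them with content: null.
        if not text:
            continue
        records.append({"id": entry["uuid"], "content": text})
    return records


# Hypothetical, hand-written sample in the assumed export shape.
SAMPLE_EXPORT = {
    "entries": [
        {"uuid": "A1", "text": "First entry"},
        {"uuid": "B2"},
        {"uuid": "C3", "text": "Third entry"},
    ]
}

if __name__ == "__main__":
    # Prints a JSON array of {id, content} objects.
    print(json.dumps(entries_to_records(SAMPLE_EXPORT), indent=2))
```

The printed array could be piped into the same embed-multi command in place of the jq output.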

I probably could have just built this by iterating on shell commands, but I like editing with a full-blown editor and don’t particularly want to practice at using the zsh builtin editor. 🤷🏻‍♂️

Load, of a sort

Once that script finishes (it takes a few moments to generate all the embeddings), querying for documents similar to a query text is also straightforward:

    #!/bin/sh
    # query.sh
    # Query the embeddings and pretty-print the results
    # Usage: ./query.sh "What is good in life?"

    query=$1

    llm similar journals \
      --number 3 \
      --content "$query" |
      jq -r -c '.content' | # [1]
      mdcat # [2]

Of note, two things that probably should have been more obvious to me:

- I don’t need to write a for-loop in shell to handle the output of llm similar; jq basically has an option for that

- Pretty-printing Markdown to a terminal is trivial after brew install mdcat
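Conceptually, a similarity query embeds the query text with the same model and then ranks the stored vectors by how closely each one points in the same direction as the query. Here is a toy sketch of that ranking, assuming cosine similarity as the metric. The ids and 3-dimensional vectors are invented (real all-MiniLM-L6-v2 embeddings have 384 dimensions), and the function names are hypothetical, not part of llm.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length, non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def most_similar(query_vec, index, number=3):
    """Return the top `number` (id, score) pairs, best match first."""
    scored = [
        (doc_id, cosine_similarity(query_vec, vec))
        for doc_id, vec in index.items()
    ]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:number]


# Invented stand-in for the rows of an embeddings table.
TOY_INDEX = {
    "entry-a": [1.0, 0.0, 0.0],
    "entry-b": [0.9, 0.1, 0.0],
    "entry-c": [0.0, 1.0, 0.0],
    "entry-d": [0.0, 0.0, 1.0],
}

if __name__ == "__main__":
    for doc_id, score in most_similar([1.0, 0.0, 0.0], TOY_INDEX, number=2):
        print(f"{doc_id}\t{score:.3f}")
```

A brute-force scan like this is fine for a personal journal; it only becomes a problem at collection sizes far beyond that.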

I didn’t go too far into clustering, which also boils down to one command: llm cluster journals 10. I hit a hiccup wherein I couldn’t run a model like LLaMa2 or an even smaller one because of issues with my installation.

Things I learned!

- jq is very good on its own!

  - and has been for years, probably!

  - using a copilot to help me take the first step with syntax using my own data is the epiphany here

- llm is quite good, doubly so with its growing ecosystem of plugins

  - if I were happier with using shells, I could have done all of this in a couple relatively simple commands

  - it provides an adapter layer that makes it possible to start experimenting/developing against usage-priced APIs and switch to running models/APIs locally when you get serious

- it’s feasible to do some kinds of LLM work on your own computer

  - in particular, if you don’t mind trading your own time getting your installation right to gain independence from API vendors and usage-based pricing

Mission complete: I have a queryable index of document vectors I can experiment with for searching, clustering, and building applications on top of my journals.

Read papers, work tutorials, the learning will happen

(Previously: Building a language model from scratch, from a tutorial)

I started to get a little impatient in transcribing linear algebra code from the tutorial into my version. In part, it’s tedious typing. More interesting: GitHub Copilot’s autocompletions were sometimes right and sometimes the wrong idiom, and actively deciding which was which compounded the tedium. This is a big lesson for our “humans-in-the-loop supervise generative LLMs” near-future. 😬

OTOH, when I got to the part where an attention head was implemented, it made way more sense having read Attention is All You Need previously. That feels like a big level-up: reading papers and working through implementations thereof brings it all together in a big learning moment. Success! 📈

Building a language model from scratch, from a tutorial

I’m working from Brian Kitano’s Llama from scratch (or how to implement a paper without crying). It’s very deep, so I probably won’t make it all the way through in the long weekend I’ve allocated for it.

I’ve skimmed the paper, but didn’t pay extremely close attention to the implementation details. But the tutorial provides breadcrumbs into the deeply math-y bits. No problems here.

I noticed that there are Ruby bindings for most of these ML libraries and was tempted to try implementing it in the language I love. But I would rather not get mired in looking up docs or translating across languages/APIs. And, I want to get more familiar with Python (after almost twenty years of not using it).

I started off trying to implement this like a Linux veteran would, as a basic CLI program. Nonetheless, I switched over to Jupyter, as it looks like part of building models is analyzing plots, and that’s not going to go well on a CLI. It also means I’m not swimming upstream so much.

Per an idea from Making Large Language Models work for you, I’m frequently using ChatGPT to quickly understand the PyTorch neural network APIs in context. Normally, I’d go on a time-consuming side-quest getting up to speed on an unfamiliar ecosystem. ChatGPT is reducing that from hours and possibly a blocker to a few minutes. Highly recommend reading those slides and trying a few of the ideas in your daily work.

I wonder how Vonnegut might have coped with the acceleration of change we face in our modern dilemma.

It’s just a hell of a time to be alive, is all—just this goddamn messy business of people having to get used to new ideas. And people just don’t, that’s all. I wish this were a hundred years from now, with everybody used to the change.

– Kurt Vonnegut, Player Piano

He wrote so much about time, traversing it and getting unstuck in it, that I think he might have shrugged and stuck to an earlier motif: So it goes.

Link directly to stations in Apple Music

Generate Apple Music URLs via Apple Music Marketing Tools. Query by song, album, basically anything you can search in the Music.app sidebar.

e.g. WEFUNK radio, long URL: https://tools.applemediaservices.com/station/ra.1461224745?country=us

Purple Current, short URL: https://apple.co/3sCvX8o

Marfa Public radio, 98.9 KMKB: https://apple.co/3sw6LRd

Handy for building Shortcuts to specific stations! Apple still doesn’t provide direct navigation to streams, in the year 2023 🤦‍♂️.
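The long URL above looks like nothing more than a station ID plus a country code, so generating one for a Shortcut can be sketched in a few lines of Python. The pattern is inferred from the single WEFUNK example, so treat it as an assumption rather than a documented API; the function and constant names are made up.

```python
from urllib.parse import urlencode

# Assumed base path, taken from the one long URL shown above.
STATION_BASE = "https://tools.applemediaservices.com/station"


def station_url(station_id: str, country: str = "us") -> str:
    """Build a long-form station link from a station ID and storefront."""
    query = urlencode({"country": country})
    return f"{STATION_BASE}/{station_id}?{query}"


if __name__ == "__main__":
    # Rebuilds the WEFUNK radio link from its station ID.
    print(station_url("ra.1461224745"))
```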

I got better at estimating projects with intentional practice

I like the idea of practicing, in the musical or athletic sense, at professional skills to rapidly improve my performance and reduce my error rate. When I was a music major, I spent hours practicing ahead of rehearsals, lessons, and performances. Until recently, I was unable to conceive of how I might do the same for leadership.

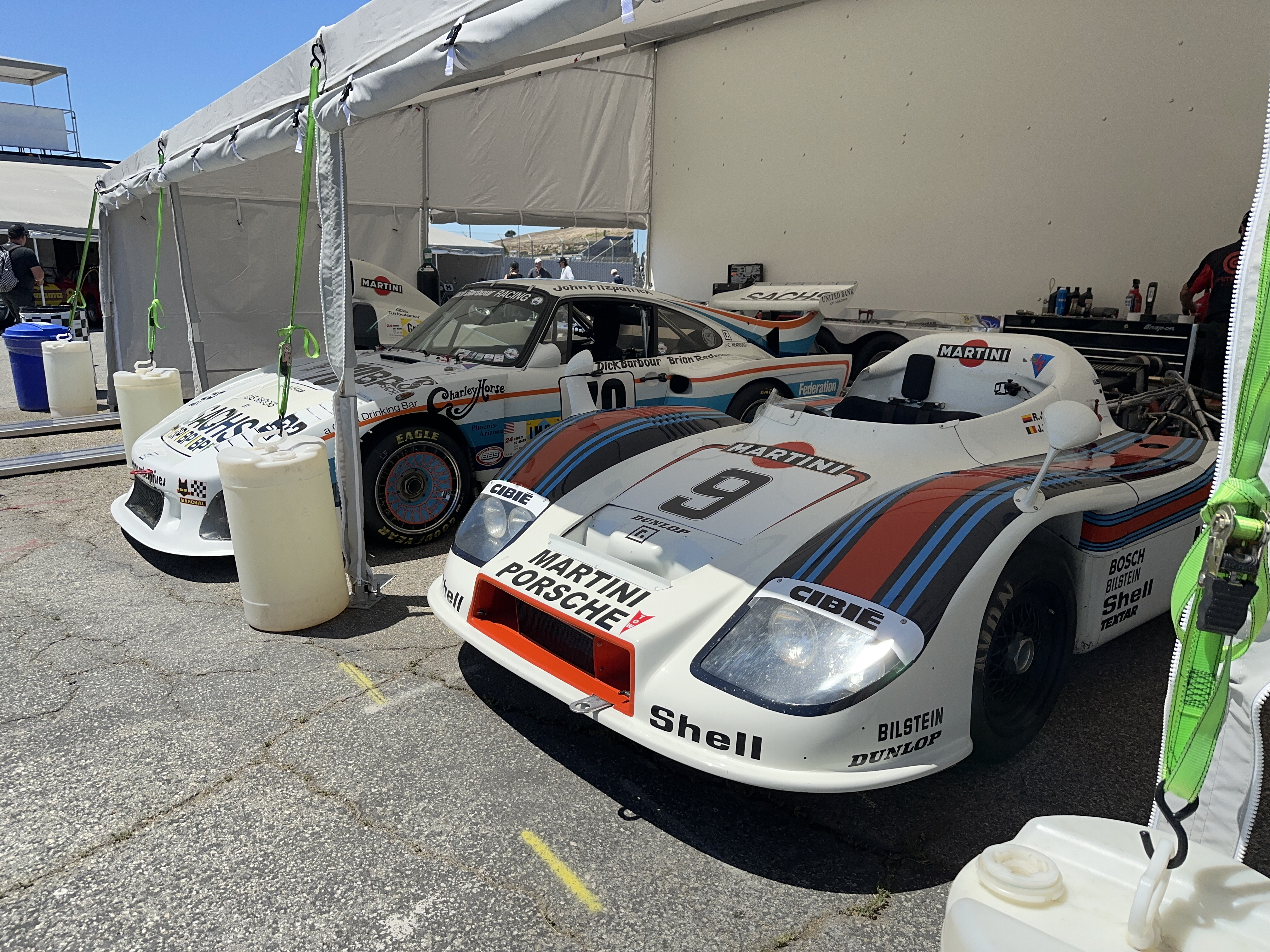

I dropped in on historic races (the newest cars were 30 years old) at Laguna Seca. It’s great to see the track at scale, not as a video-game or television camera angle. I watched practice sessions from the grandstand, and heard and watched the cars rip by at competitive speeds.

I walked the paddock and infield. So many good cars to see. Felt the energy of mechanics getting cars, sometimes older than me, ready to go fast on track. I smelled a lot of gasoline and oil.

It was a good day.

This is where we live, for a few days. I’m hoping “little adventures” like this are a nice complement to vacations. Perhaps the former create new mental connections and the latter are about disconnecting to consider and build upon existing connections.

“Now a truth,” said the judge. “The main business of humanity is to do a good job of being human beings,” said Paul, “not to serve as appendages to machines, institutions, and systems.”

– Kurt Vonnegut, Player Piano

We’re here to do human things: create for each other, love our friends and family, support our communities, experience and protect our world.

A life serving only algorithms wrought by corporations[1], engaged with organizations that care more about themselves than their people, or partaking in eco-cultural regimes that disregard our humanity is not worth pursuing.

[1] IOW, don’t throw your whole existence into being a content creator, folks.

A vacation is a tool for disconnecting

I’m reflecting[1] on travelogue’ing my recent trip to Disneyland. In particular, it was effective at taking my mind off work while maintaining creative writing and publishing habits.

Lately, I find myself dwelling on work too much or noodling through various puzzles I’m trying to solve. I would rather not bring that with me on vacation. What I removed and what I replaced those thoughts with went pretty well! For my own memory, and perhaps dear reader’s benefit:

Allow plenty of moments to just exist. Listen, enjoy where you are. Engage with the folks you’re vacationing with, presuming they’re not in a phone-bubble. Try to put the phone away after you mindlessly open it out of boredom. Cultivate the moments of boredom, if you can.

Don’t look at feeds like Slack, Discord, socials, etc. I took the extra step of removing Slack from my phone entirely. It’s easier to remove it entirely and re-add each Slack later than to try to ignore particular instances whenever I find myself mindlessly opening the app. Read a lot, ideally longer form things chosen beforehand. Again: ignore the feeds of people hustling or trying to get their ideas into my attention. Think your own thoughts!

Replace all those feeds and work-think with written and photographic journaling. Look for photography opportunities, even if you’re not particularly good at it (yet). Write in a journal, daily, even if you’re not good at it (yet). Write about what you’ve photographed, about whatever you’re thinking while disconnected, about that conversation you just had or the novel thing you just saw/did.

If you want to share via social networks, do it once a day. I posted (via micro.blog and Ulysses) a short reflection on each day. For texture, I included a quad of photos, and wrote a few sentences to reflect on my photography or day so far. Once the post is made, put the computer away and get back to reveling in disconnection.

YMMV, but I hope you try it!

[1] With apologies to Craig Mod for standing on the shoulders of A Walk is a Platform for Creative Work.

A pretty ideal travel day: start at the pool, wait in the breeze and shade, depart from the open tarmacs of tiny Long Beach Airport.

Not pictured: returning to the searing heat of central Texas. 😬🙃🫠

Many parts of Disneyland are ridiculously easy to photograph.

Particularly at dusk and night, and for folks (like me) with very rudimentary photography skills. I’m pretty convinced this is one of the myriad details the Imagineers wrangle as they design these parks.