Build

exa in 30 seconds

What is it? exa is ls reimagined for modern times, in Rust. And more colorfully. It is nifty, but not life-changing. I mostly still use ls, because muscle memory is strong and it's basically the only mildly friendly thing about Unix.

How do I do boring old ls things?

Spoiler alert: basically the same.

- ls -a: exa -a

- ls -l: exa -l

- ls -lR: exa -lR

How do I do things I rarely had the gumption to do with ls?

- exa -rs created: simple listing, sort files reverse by created time. Other options: name, extension, size, type, modified, accessed, created, inode

- exa -hl: show a long listing with headers for each column

- exa -T: recurse into directories a la tree

- exa -l --git: show git metadata alongside file info

Generalization and specialization: more of column A, a little less of column B

- Now, I attempt to write in the style of a tweetstorm. But about code. For my website. Not for tweets.

- For a long time, we have been embracing specialization. It's taken for granted even more than capitalism. But maybe not as much as the sun rising in the morning.

- From specialization comes modularization, inheritance, microservices, pizza teams, Conway's Law, and lots of other things we sometimes consider as righteous as apple pie.

- Specialization comes at a cost though. Because a specialized entity is specific, it is useless out of context. It cannot exist except for the support of other specialized things.

- Interconnectedness is the unintended consequence of specialization. Little things depend on other things.

- Those dependencies may prove surprising, fragile, unstable, chaotic, or create a bottleneck.

- Specialization also requires some level of infrastructure to even get started. You can't share code in a library until you have the infrastructure to import it at runtime (dynamic linking) or resolve the library's dependencies (package managers).

- The expensive open secret of microservices and disposable infrastructure is that you need a high level of operational acumen to even consider starting down the road.

- You're either going to buy this as a service, buy it as software you host, or build it yourself. Either way, you're going to pay for this decision right in the budget.

- On the flip side is generalization. The grand vision of interchangeable cogs that can work any project.

- A year ago I would have thought this was as foolish as microservices. But the ecosystems and tooling are getting really good. And, JavaScript is getting good enough and continues to have the most amazing reach across platforms and devices.

- A year ago I would have told you generalization is the foolish dream of the capitalist who wants to drive down his costs by treating every person as a commodity. I suspect this exists in parts of our trade, but developers are generally rare enough that finding a good one is difficult enough, let alone a good one that knows your ecosystem and domain already.

- Generalization gives you a cushion when you need to help a short-handed team get something out the door. You can shift a generalist over to take care of the dozen detail things so the existing team can stay focused on the core, important things. Shifting a generalist over for a day doesn't get you 8 developer hours, but it might get you 4 when you really need it.

- Generalization means more people can help each other. Anyone can grab anyone else and ask to pair, for a code review, for a sanity check, etc.

- When we speak of increasing our team's bus number, we are talking about generalizing along some axis. Ecosystem generalists, domain knowledge generalists, operational generalists, etc.

- On balance, I still want to make myself a T-shaped person. But, I think the top of the T is fatter than people think. Or, it's wider than it is tall, by a factor of one or two.

- Organizationally, I think we should choose the tools and processes we use carefully so that we don't end up where only one or two people do something. That's creating fragility and overhead where it doesn't yield any benefit.

Categorizing and understanding magical code

Sometimes, programmers like to disparage “magical code”. They say magical code is causing their bugs, magical code is offensive to use, magical code is harder to understand, we should try to write “less magical” code.

“Wait, what’s magic?”, I hear you say. That’s what I’m here to talk about! (Warning: this post contains an above-average density of “air quotes”, ask your doctor if your heart is strong enough for “humorous quoting”.)

Magic is code I have yet to understand

It’s not inscrutable code. It’s not bad code. It doesn’t intentionally defy understanding, like an obfuscated code contest or code golfing.

I can start to understand why a bit of code is frustratingly magical to me by categorizing it. (Hi, I’m Adam, I love categorizing things, it’s awful.)

“Mathemagical” code escapes my understanding due to its foundation in math and my lack of understanding therein. I recently read Purely Functional Data Structures, which is a great book, but the parts on proving e.g. worst-case cost for amortized operations on data structures are completely beyond my patience or confidence in math. Once Greek symbols enter the text, my brain kinda “nope!”s out.

“Metamagic” is hard to understand due to use of metaprogramming. Code that generates code inside code is a) really cool and b) a bit of a mind exploder at first. When it works, it’s glorious and not “magical”. When it falls short, it’s a mess of violated expectations and complaints about magic. PSA: don’t metaprogram when you can program.
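A minimal sketch of what “code that generates code” can look like in Ruby. The Ticket class and its status names are hypothetical, invented purely for illustration. The loop generates three predicate methods with define_method; the plain method below it does the same job without any metaprogramming.

```ruby
# Hypothetical example: Ticket and its statuses are invented for illustration.
class Ticket
  STATUSES = %i[open closed archived].freeze

  attr_reader :status

  def initialize(status)
    @status = status
  end

  # Metaprogrammed: generates open?, closed?, and archived? when the class loads.
  # None of these method names appear literally in the source.
  STATUSES.each do |name|
    define_method("#{name}?") { status == name }
  end

  # The plain alternative: one explicit method that is easy to search for.
  def status?(name)
    status == name
  end
end

ticket = Ticket.new(:open)
p ticket.open?           # => true
p ticket.closed?         # => false
p ticket.status?(:open)  # => true
```

Searching the source for "def closed?" finds nothing, which is a small taste of why generated methods can feel magical when something goes wrong.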

“Sleight of hand” makes it harder for me to understand code because I don’t know where the control flow or logic goes. Combining inheritance and mixins when using Ruby is a good example of control flow sleight-of-hand. If a class extends Foo, includes Bar, and all three define a method do_the_thing, which one gets called (trick question: all of them, trick follow-up question: in what order!)? The Rails router is a good example of logical sleight-of-hand. If I’m wondering how root to: “some_controller/index” works and I have only the Rails sources on me, where would I start looking to find that logic? For the first few years of Rails, I’d dig around in various files before I found the trail to that answer.
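To make the do_the_thing question concrete, here is a small sketch. Foo, Bar, and do_the_thing come from the paragraph above; the Widget class, the return values, and the assumption that every definition calls super are made up for illustration. Without those super calls, only the class's own method would run.

```ruby
# Hypothetical classes for illustration; only Foo, Bar, and do_the_thing
# are named in the text.
class Foo
  def do_the_thing
    ["Foo"]
  end
end

module Bar
  def do_the_thing
    # super continues up the ancestor chain, which here leads to Foo.
    ["Bar"] + super
  end
end

class Widget < Foo
  include Bar

  def do_the_thing
    # super reaches Bar first: an included module sits between the class
    # and its superclass in the ancestor chain.
    ["Widget"] + super
  end
end

p Widget.new.do_the_thing    # => ["Widget", "Bar", "Foo"]
p Widget.ancestors.first(3)  # => [Widget, Bar, Foo]
```

When the call order is unclear, Widget.ancestors is a quick way to see the chain Ruby will walk.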

“Multi-level magic schemes” is my new tongue-in-cheek way to explain a tool like tmux. It’s a wonderful tool for those of us who prefer to work in (several) shells all day. I’m terrified of when things go wrong with it, though. To multiplex several shells into one process while persisting that state across user sessions requires tmux to operate at the intersection of Unix shells, process trees, and redrawing interfaces to a terminal emulator. I understand the first two in isolation, but when you put it all together, my brain again “nope!”s out of trying to solve any problems that arise. Other multi-level magic schemes include object-relational mappers, game engines, operating system containers, and datacenter networking.

I can understand magic and so can you!

I’m writing this because I often see ineffective reactions to “magical” code. Namely, 1) identify code that is frustrating, 2) complain on Twitter or Slack, 3) there is no step 3. Getting frustrated is okay and normal! Contributing only negative energy to the situation is not.

Instead, once I find a thing frustrating, I try to step back and figure out what’s going on. How does this bit of code or tool work? Am I doing something that it recommends against or doesn’t expect? Can I get back on the “golden path” the code is built for? Can I find the code and understand what’s going on by reading it? Often some combination of these side quests puts me back on my way and out of frustration’s way.

Other times, I don’t have time for a side quest of understanding. If that’s the case, I make a mental note that “here be dragons” and try to work around it until I’m done with my main quest. Next time I come across that mental map and remember “oh, there were dragons here!”, I try to understand the situation a little better.

For example, I have a “barely tolerating” relationship with webpack. I’m glad it exists, it mostly works well, but I feel its human factors leave a lot to be desired. It took a few dives into how it works and how to configure it before I started to develop a mental model for what’s going on such that I didn’t feel like it was constantly burning me. I probably even complained about this in the confidence of friends, but for my own personal assurances, attached the caveat of “this is magical because it’s unfamiliar to me.”

Which brings me to my last caveat: all this advice works for me because I’ve been programming for quite a while. I have tons of knowledge, the kind anyone can read and the kind you have to win by experience, to draw upon. If you’re still in your first decade of programming, nearly everything will seem like magic. Worse, it’s hard to tell what’s useful magic, what’s virtuous magic, and what’s plain-old mediocre code. In that case: when you’re confronted with magic, consult me or your nearest Adam-like collaborator.

Bias to small, digestible review requests. When possible, try to break down your large refactor into smaller, easier to reason about changes, which can be reviewed in sequence (or better still, orthogonally). When your review request gets bigger than about 400 lines of code, ask yourself if it can be compartmentalized. If everyone is efficient at reviewing code as it is published, there’s no advantage to batching small changes together, and there are distinct disadvantages. The most dangerous outcome of a large review request is that reviewers are unable to sustain focus over many lines, and the code isn’t reviewed well or at all.

This has made code review of big features way more plausible on my current team. Large work is organized into epic branches which have review branches which are individually reviewed. This makes the final merge and review way more tractable.

Your description should tell the story of your change. It should not be an automated list of commits. Instead, you should talk about why you’re making the change, what problem you’re solving, what code you changed, what classes you introduced, how you tested it. The description should tell the reviewers what specific pieces of the change they should take extra care in reviewing.

This is a good start for a style guide ala git commits!

JavaScript's amazing reach

There’s plenty of room to criticize JavaScript as a technology, language, and community(s). But, when I’m optimistic, I think the big things JavaScript as a phenomenon brings to the world are:

- amazing reach: you can write JS for frontends, backends, games, art, music, devices, mobile, and domains I'm not even aware of

- a better on-ramp for people new to programming: the highly motivated can learn JS and not worry about the breadth of languages they may need to learn for operations, design, reporting, build tooling, etc.

- lots of those on-ramps: you could start learning JS to improve a spreadsheet, automate something in Salesforce, write a fun little Slack bot, etc.

In short, JavaScript increases the chances someone will level up their career. Maybe they’ll continue in sales or marketing but use JS as a secret weapon to get more done or avoid tedium. Maybe it gives them an opportunity to try programming without changing job functions or committing to a bootcamp program.

Bottom line: JavaScript, like Ruby and PHP before it, is the next thing that’s improving the chances non-programmers become programmers and reach the life-improving salary and career trajectories software developers have enjoyed for the past decade or two.

I welcome our future computer assistants...

…but they’re going to have to deal with the fact that my wife and I commonly have exchanges like this:

Me: can you hand me the thingy from the thing?

Courtney: this one?

Me: which one?

Courtney: the one I’m pointing at

Me: I’m not looking at you

Courtney: this one

Me: the thingy!

Good luck, machine learners!

Computers are coming for more jobs than we think

A great video explainer on how computers and creative destruction are different this time: Why Automation is Different this Time. Hint: we need better ideas than individualism, “markets”, and supply-side economics for this to end well. Via Kottke.

Three Nice Qualities

One of my friends has been working on a sort of community software for several years now. Uniquely, this software, Uncommon, is designed to avoid invading and obstructing your life. From speaking with my friend Brian, it sounds like people often mistake this community for a forum or a social media group. That’s natural; we often understand new things by comparing or reducing them to old things we already understand.

The real Quality Uncommon is trying to embody is that of a small dinner party. How do people interact in these small social settings? How can software provide constructive social norms like you’d naturally observe in that setting?

I’m currently reading How Buildings Learn (also a video series). It’s about architecture, building design, fancy buildings, un-fancy buildings, pretty buildings, ugly buildings, etc. Mostly it’s about how buildings are suited for their occupants or not and whether those buildings can change over time to accommodate the current or future occupants. The main through-lines of the book are 1) function dictates form and 2) function is learned over time, not specified.

A building that embodies the Quality described by How Buildings Learn uses learning and change over time to become better. A building with the Quality answers 1) How does one design a building such that it can allow change over time while meeting the needs and wants of the customer paying for its current construction? and 2) How can the building learn about the functions its occupants need over time so that it changes at a lower cost than tearing it down and starting a new building?

Bret Victor has bigger ideas for computing. He seeks to design systems that help us explore and reason on big problems. Rather than using computers as blunt tools for doing the busy work of our day-to-day jobs as we currently do, we should build systems that help all of us think creatively at a higher level than we currently do.

Software that embodies that Quality is less like a screen and input device and more like a working library. Information you need, in the form of books and videos, line the walls. Where there are no books, there are whiteboards for brainstorming, sharing ideas, and keeping track of things. In the center of the room are wide, spacious desks; you can sit down to focus on working something through or stand and shuffle papers around to try and organize a problem such that an insight reveals itself. You don’t work at the computer, you work amongst the information.

They’re all good qualities. Let’s build ‘em all.

I have become an accomplished typist

Over the years, many hours in front of a computer have afforded me the gift of keyboarding skills. I’ve put in the Gladwellian ten thousand hours of work and it’s really paid off. I type fairly quickly, somewhat precisely, and often loudly.

Pursuant to this great talent, I’ve optimized my computer to have everything at-hand when I’m typing. I don’t religiously avoid the mouse. I do seek more ways to use the keyboard to get stuff done quickly and with ease. Thanks to tools like Alfred and Hammerspoon, I’ve achieved that.

With the greatest apologies to Bruce Springsteen:

Well I got this keyboard and I learned how to make it talk

Like every good documentary on accomplished performers, there’s a dark side to this keyboard computering talent I possess. There are downsides to my keyboard-centric lifestyle:

- I sometimes find it difficult to step back and think. Rather than take my hands off the keyboard, I could more easily switch to some other app. I feel like this means I'm still making progress, in the moment, but really I'm distracting myself.

- Even when I don't need to step back and think, it's easy for me to switch over to another app and distract myself with social media, team chat, etc.

- Being really, really good at keyboarding is almost contrary to Bret Victor's notion of using computers as tools for thinking rather than self-contained all-doing virtual workspaces.

- Thus I often find I need to push the keyboard away from me, roll my chair back, and think, read, or write to do the deep thinking.

All that said, when I am in the zone, my fingers dance over this keyboard, I think with my fingers, and it’s great.

The annoying browser boundaries

Since I started writing web applications around 1999, there’s been an ever-present boundary around what you can do in a browser. As browsers have improved, we have a new line in the sand. They’re more enabling now, but equally annoying.

Applications running on servers in a datacenter (Ruby, Python, PHP) can’t:

- interact with a user’s data (for largely good reason)

- make interesting graphics

- hop over to a user’s computer(s) without tremendous effort (i.e. people running and securing their own servers)

Browser applications can’t:

- store data on the user’s computer in useful quantities (this recently became less true, but isn’t widely used yet)

- compute very hard; you can’t run the latest games or intense math within a browser

- hang around on a user’s computer; browsers are a sandbox of their own, built around ephemeral information retrieval and not long-term functionality

- present themselves for discovery in various vendor app stores (iTunes, Play, Steam, etc.)

It may seem like native applications are the way to go. They can do all the things browsers cannot. But!

- native apps are more susceptible to the changing needs of their operating system host; they may go stale (look outdated) or outright stop working after several years

- they often struggle to find a sustainable mechanism for exchanging a user’s money for a developer’s time; part of that is the royalty model paid to platforms and stores, part of that is the difficulty of business inherent to building any application

- they cannot exceed the resources of one user’s computer, except for a few very high-end professional media production applications

In practice, this means there’s a step before building an application where I figure out where some functionality lives. “This needs to think real hard, it goes on a very specific kind of server. This needs to store some data so it has to go on that other server. That needs to present the data to the user, so I have to bridge the server and browser. But we’d really like to put this in app stores soooo, how are we going to get something resembling a native app without putting a lot of extra effort into it?”

There are entirely good reasons that this dichotomy has emerged, but it’s kinda dumb too. In other words, paraphrasing Churchill:

Browsers are the worst form of cross-platform development, except for all the others.

Connective blogging tissue, then and now

I miss the blogging scene circa 2001-2006. This was an era of near-peak enthusiasm for me. One of those moments where a random rock was turned over and what lay underneath was fascinating, positive, energizing, captivating, and led me to a better place in my life and career.

As is noted by many notable bloggers, those days are gone. Blogs are not quite what they used to be. People, lots of them!, do social media differently now.

Around 2004, amidst the decline of peer-to-peer technologies, I had a hunch that decentralized technology was going to lose out to centralization. Lo and behold, Friendster then MySpace then Facebook then Twitter made this real. People, I think, will always look to a Big Name first and look to run their own infrastructure nearly last.

In light of that, I still think the lost infrastructure of social media is worth considering. As we stare down the barrel of a US administration that is likely far less benevolent with its use of an enormous propaganda and surveillance mechanism, should we swim upstream of the ease of centralization and decentralize again?

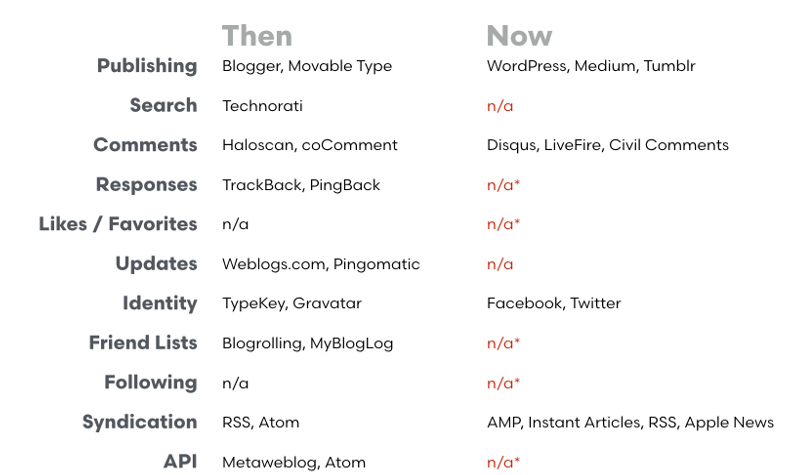

Consider this chart identifying community and commercially run infrastructure that used to exist and what has, in some cases, succeeded it:

Connective tissue, then and now

I look over that chart and think, yeah a lot of this would be cool to build again.

Would people gravitate towards it? Maybe.

Could it help pop filter bubbles, social sorting, fake news and trust relationships? Doesn’t seem worth doing if it can’t.

Do people want to run their identity separate of the Facebook/Twitter/LinkedIn behemoth? I suspect what we saw as a blog back then is now a “pro-sumer” application, a low cost way for writers, analysts, and creatives to establish themselves.

Maybe Twitter and Facebook are the perfect footprint for someone who just wants to air some steam about their boss, politics, or a fellow parent? It’s OK if people want to express their personality and opinions in someone else’s walled garden. I think what we learned in 2016 is that the walled gardens are more problematic than merely commercialism, though.

That seems pessimistic. And maybe missing the point. You can’t bring back the 2003-6 heyday of blogging a decade later. You have to make something else. It has to fit the contemporary needs and move us forward. It has to again capture the qualities of fascinating, positive, energizing, captivating, and leading to a better place.

I hope we figure it out and have another great idea party.