My summer at 100 hertz

Is there a lost art to writing code without a text editor, or even a (passable) computer? It sounds romantic, I’ve done it before, I tried it again, and…it was not that great. 🤷🏻‍♂️

0. An archaic summer, even by the standards of the late 1990s

The summer of 2002 was my last semester interning at Texas Instruments. I was tasked with writing tests to verify the next iteration of the company’s flagship DSP, the ‘C6414[1]. This particular chip did not yet exist; I was doing pre-silicon verification.

At the time, that meant I used the compiler toolchain for its ‘C64xx predecessors to build C programs and verify them for correctness on a development board (again, with one of the chip’s predecessors). Then, I shipped the same compiled code off to a cluster of Sun machines[2] and ran the program on a gate-level simulation of the new chip, based on the hardware description language source (VHDL, I think) for the as-yet physically nonexistent chip[3].

The output of this execution was a rather large file (hundreds of megabytes, IIRC) that captured the voltage levels on many[4] of the wires on the chip[5]. Armed with digital analyzer software (read: it could show me whether a wire was at high or low voltage, i.e., whether its value was 1 or 0 in binary), and someone telling me how to group together the wires that represented the main registers on the chip, I could step through the state of my program by examining the register values one cycle at a time.

Beyond the toolchain and workflow, which are now considered archaic and generally received by younger colleagues as an “in the snow, uphill, both ways” kind of story, I faced another complication. If you work out from first principles what “running a gate-level simulation of a late-90’s era computer chip on late-90’s era Sun computers” implies, you’ll realize that at a useful level of fidelity this kind of computation is phenomenally expensive.

I had plenty of time to contemplate this, and one day I did. Programs that took about a minute to compile, load onto a development board, and execute took about an hour to run on the simulator. Handily, the simulator output included the wall-clock runtime and the number of cycles executed. So I divided cycles by seconds and came to a rough estimate that the simulator ran programs at less than 100 Hz; the final silicon’s clock speed was expected to hit 600-700 MHz, if I recall correctly.
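That back-of-envelope estimate is a single division, and a second division gives the slowdown relative to real silicon. Here is a minimal sketch in Python. The one-hour runtime and the 600 MHz target come from the recollection above; the cycle count is a made-up placeholder (the text doesn't give the real one), picked only so the result lands under 100 Hz. The function names are hypothetical too.

```python
def effective_clock_hz(cycles: int, wall_clock_seconds: float) -> float:
    """Simulated cycles completed per second of real (wall-clock) time."""
    if wall_clock_seconds <= 0:
        raise ValueError("wall_clock_seconds must be positive")
    return cycles / wall_clock_seconds


def slowdown_factor(target_hz: float, simulated_hz: float) -> float:
    """How many times slower the simulation is than the target clock."""
    return target_hz / simulated_hz


# ASSUMPTION: 300,000 cycles is an illustrative figure, not a measured one.
cycles = 300_000
# From the text: a run took about an hour on the simulator.
wall_clock_seconds = 60 * 60
# From the text: the low end of the expected 600-700 MHz clock speed.
target_hz = 600e6

sim_hz = effective_clock_hz(cycles, wall_clock_seconds)
slowdown = slowdown_factor(target_hz, sim_hz)

print(f"simulator: about {sim_hz:.0f} Hz")
print(f"slowdown vs. silicon: about {slowdown:,.0f}x")
```

With these placeholder inputs the simulator comes out at roughly 83 Hz, several million times slower than the silicon it was standing in for.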

I was not very productive that summer[6]! But it does return me to the point of this essay: the era when it was better to think a program through than to write it and see what the computer made of it was not that great.

1. Coding “offline” sounds romantic, was not that great

I like to imagine software development in mythological halls like Bell Labs and Xerox PARC worked like this:

- You wrote memos on a typewriter, scribbled models and data structures in notebooks, or worked out an idea on a chalkboard.

- Once you worked out that your idea was good, with colleagues over coffee or in your head, you started writing it out in what we’d call, today, a low-level language like C or assembly or *gasp* machine code.

- If you go back far enough, you turned a literally handwritten program into a typed-in program on a very low-capacity disk or a stack of punch cards.

- By means that had disappeared long before I started programming, you conveyed that disk or stack of cards to an operator, who mediated access to an actual computer and eventually gave you back the results of running your program.

The further you go back in computing history, the more this is how the ideas must have happened. Even in the boring places and non-hallowed halls.

I missed the transition point by about a decade,[7] as I was starting to write software in the late nineties. Computers were fast enough to edit, compile, and run programs such that you could think about programming nearly interactively. The computational surplus had reached the point that you could interact with a REPL, shell, or compiler fast enough to keep context in your head without distraction from “compiler’s running, time for coffee”[8].

2a. Snow-cloning the idea to modern times

In an era of video meetings and team chats and real-time docs, this older way of working sounds somewhat relaxing. 🤷🏻‍♂️ Probably I’m a little romantic about an era I didn’t live through, and it was actually a bit miserable: shuffled or damaged punch cards, failed batch jobs, etc.

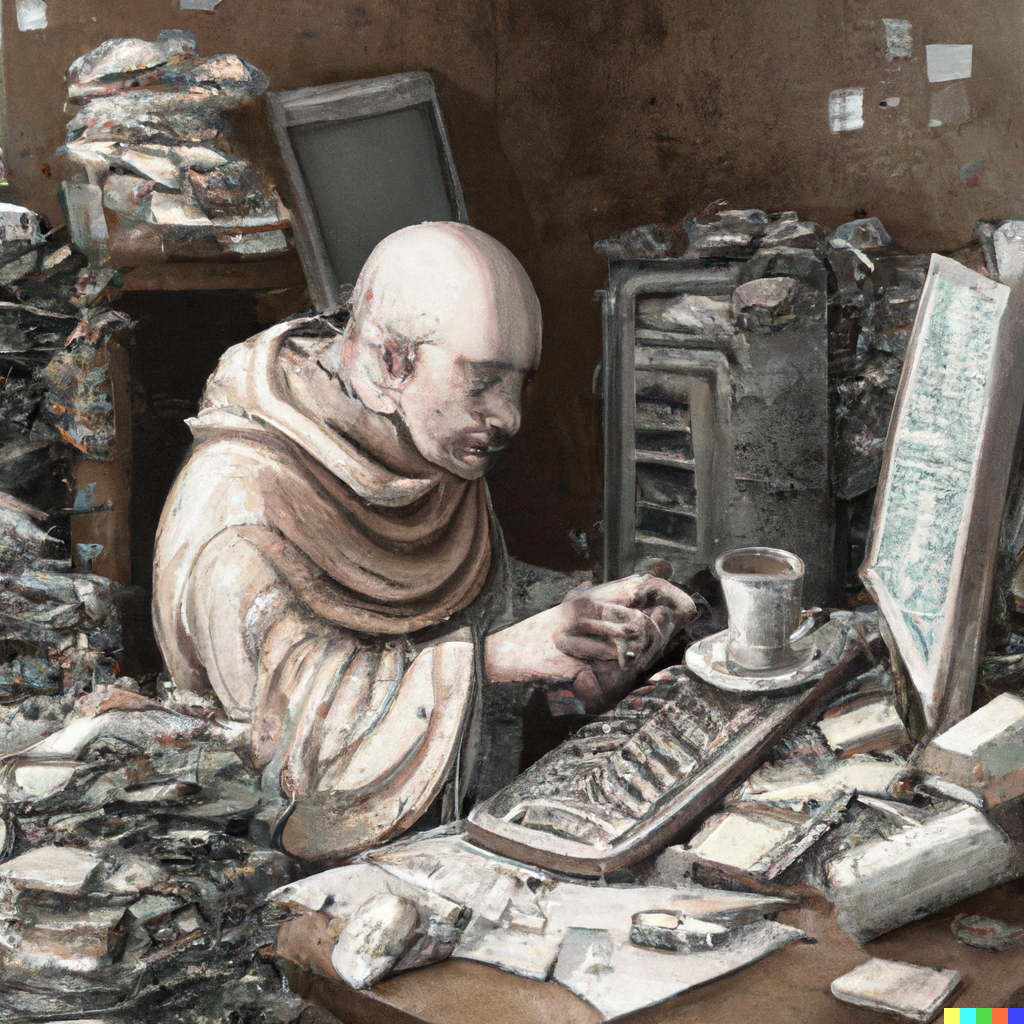

I like starting projects away from a computer, in a notebook or on a whiteboard. Draw all the ideas out, sketch some pseudocode. Expand on ideas, draw connections, scratch things out, make annotations, draw pictures. So much of that is nigh impossible in a text editor. Linear text areas and rectangular canvases, no matter how infinite, don’t allow for it.

But, there’s something about using only your wits and hands to wrestle with a problem. A different kind of scrutiny you get from writing down a solution, examining it. Scratching a mistake out, scribbling a corner case to the side. Noticing something I’ve glossed over, physically adjusting my body to center it in my field of vision, and thinking more deeply about it. I end up wondering if I should do more offline coding.

2b. Ways of programming “offline” that weren’t that great in 2023

Sketching out programs/diagrams on an iPad was not as good as I’d hoped. Applications that hint at the hardware’s paper- and whiteboard-like promise exist. But it’s still a potential future, not a current reality. The screen is too small, drawing lag exists (in software, at least), and the tactility isn’t quite right.

Writing out programs, long-hand, on paper or on a tablet is not great. I tried writing out Ruby to a high level of fidelity[9]. Despite Ruby’s potential for concision, it was still like drinking a milkshake through a tiny straw. It felt like my brain could think faster than I could jot code down, and it made me a little dizzy to boot.

Writing pseudocode was a little more promising. Again, stylus and tablet isn’t the best, but it’s fine in a pinch. By hand on paper/notebooks/index cards is okay if you don’t mind scratching things out and generally making a mess[10]. Writing out pseudocode in digital notes, e.g. in fenced code blocks within a Markdown document, is an okay way to get a short bit of code out and stay in a “thinking” mindset rather than a “programming” one.

3. Avoid the computer until it’s time to compute some things

At an absurd level of reduction, we use programming to turn billions of arithmetic operations into a semblance of thinking in the form of designs, documents, source files, presentations, diagrams, etc. But rarely is the computer the best way of arriving at that thinking. More often, it’s better to step away from the computer and scribble or draw or brainstorm.

(Aside: the pop culture of workspaces and workflows and YouTube personalities and personal knowledge management and “I will turn you into a productivity machine!” courses widely misses the mark on how good ideas actually get generated, and on how poorly that process fits into a highly engaging social media post, image, or video.)

Find a reason to step away from the computer

Next time you’re getting started on a project, try grabbing a blank whiteboard/stack of index cards/sheet of paper. Write out the problem and start scribbling out ideas, factors to consider, ways you may have solved it before.

Even better: next time you find yourself stuck, do the same.

Better still: next time you find a gnarly problem inside a problem you previously thought you were on your way to solving, grab that blank canvas, step away from the computer, and dump your mental state onto it. Then, start investigating how to reduce the gnarly problem to a manageable one.

1. The TMS320C64x chips were unique in that they resembled a GPU more than a CPU. They had multiple instruction pipelines which the compiler or application developer had to try to get the most out of. It didn’t work out in terms of popularity, but it was great fun to think about. ↩

2. …in a vaguely-named and ambiguously-located datacenter somewhere. Cloud computing! ↩

3. Are we having fun yet? In retrospect, yes. ↩

4. I don’t think it was all of the wires in the chip, as even at the time that would have been hundreds of millions of datapoints per cycle. ↩

5. Again, simulated and not yet extant 🤯 ↩

6. Excruciatingly so, by my current standards. ↩

7. Modulo writing programs to run in gate-level simulations. ↩

8. At least, for smaller programs in some languages. ↩

9. i.e. few omitted syntactic idioms ↩

10. Arguably, the point of working in physical forms is to make a mess, IMO. ↩